
Three iterative methods for solving second order nonlinear ODEs arising in physics

⁎Corresponding author. majeed.a.w@ihcoedu.uobaghdad.edu.iq (M.A. Al-Jawary)


This article was originally published by Elsevier and was migrated to Scientific Scholar after the change of Publisher.

Peer review under responsibility of King Saud University.

Abstract

In this work, three iterative methods have been implemented to solve several second-order nonlinear ODEs that arise in physics. The proposed iterative methods are the Temimi-Ansari method (TAM), the Daftardar-Jafari method (DJM) and the Banach contraction method (BCM). None of these methods requires any assumption to deal with the nonlinear terms. The obtained results are compared numerically with those of other numerical methods, namely the fourth-order Runge-Kutta (RK4) and Euler methods. In addition, the convergence of the proposed methods is established based on the Banach fixed point theorem. The maximal error remainder values show that the present methods are effective and reliable. The software used for the calculations in this study was Mathematica® 10.

Keywords

Iterative methods

Approximate solution

Numerical solution

Runge-Kutta 4 method

Euler method

1 Introduction

Differential equations have many applications in science and engineering, especially in problems that take the form of nonlinear equations. Nonlinear ordinary differential equations have become increasingly important and interesting to mathematicians and researchers. These equations are known to describe many types of phenomena, such as dynamics, heat conduction, diffusion, acoustic waves and transport.

Iterative methods are often used to obtain approximate solutions of nonlinear problems. A new iterative method was presented in 2011 by Temimi and Ansari (TAM) (Temimi and Ansari, 2011) for solving nonlinear problems. The TAM was inspired by the homotopy analysis method (HAM) (Liao and Chwang, 1998) and has been used to solve several ODEs (Temimi and Ansari, 2015), PDEs and KdV equations (Ehsani et al., 2013), differential algebraic equations (DAEs) (AL-Jawary and Hatif, 2017), Duffing equations (AL-Jawary and Al-Razaq, 2016), some chemical problems (AL-Jawary and Raham, 2017), the thin film flow problem (AL-Jawary, 2017) and Fokker-Planck equations (AL-Jawary et al., 2017).

The second method was proposed in 2006 by Daftardar-Gejji and Jafari (DJM) (Daftardar-Gejji and Jafari, 2006) to solve nonlinear equations. This method has also been used to solve fractional differential equations (Daftardar-Gejji and Bhalekar, 2008), partial differential equations (Bhalekar and Daftardar-Gejji, 2008), Volterra integro-differential equations and some applications of the Lane-Emden equations (AL-Jawary and AL-Qaissy, 2015), and evolution equations (Bhalekar and Daftardar-Gejji, 2010). The method produces, through successive approximations, a solution that converges to the exact solution if such a solution exists.

The third iterative method is based on the Banach contraction principle (BCP) (Daftardar-Gejji and Bhalekar, 2009), which is regarded as the main source of metric fixed point theory. The Banach contraction principle, also known as Banach's fixed point theorem (BFPT), has been used to solve various kinds of differential and integral equations (Joshi and Bose, 1985).

In this paper, the TAM, DJM and BCM will be implemented to obtain approximate solutions of nonlinear second-order ODEs arising in physics. These solutions will be compared numerically with results obtained by the Runge-Kutta and Euler methods to show the validity and efficiency of the proposed iterative methods. The convergence of the presented methods is also discussed.

This paper is organized as follows: Section 2 reviews the basic ideas of the proposed iterative methods. Section 3 presents the convergence of the proposed methods. Section 4 illustrates the approximate solutions and the numerical simulation for several test problems. Finally, the conclusion is given in Section 5.

2 The basic concepts of three iterative methods

An iterative method is a mathematical procedure that generates a sequence of improved approximate solutions for a class of problems. Starting from a given initial approximation, it produces an approximate solution that converges to the exact solution whenever the corresponding sequence is convergent.

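Consider the following nonlinear differential equation:

$$L(u(x)) + N(u(x)) + g(x) = 0, \tag{1}$$

with the boundary conditions

$$B\left(u, \frac{du}{dx}\right) = 0, \tag{2}$$

where $x$ denotes the independent variable, $u(x)$ is the unknown function, $g(x)$ is a given function, $L$ is a linear operator, $N$ is a nonlinear operator and $B$ is a boundary operator.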

2.1 The basic idea for the TAM

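We begin by taking as initial guess the function $u_0(x)$ obtained by solving the following initial value problem (Temimi and Ansari, 2011):

$$L(u_0(x)) + g(x) = 0, \qquad B\left(u_0, \frac{du_0}{dx}\right) = 0.$$

The next approximations are obtained by solving, for $n = 0, 1, 2, \ldots$,

$$L(u_{n+1}(x)) + g(x) + N(u_n(x)) = 0, \qquad B\left(u_{n+1}, \frac{du_{n+1}}{dx}\right) = 0,$$

so that each $u_n(x)$ is an approximate solution of Eq. (1). Then, the solution of problem (1) with (2) is given by

$$u(x) = \lim_{n \to \infty} u_n(x). \tag{5}$$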
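To make the TAM steps concrete, the following Python sketch applies them to a polynomial test case. It assumes the canonical Painlevé I form $y'' = 6y^2 + x$ with the illustrative conditions $y(0) = 0$, $y'(0) = 1$ (chosen for the sketch, not taken from the paper), writes it as $L(u) = u''$, $N(u) = -6u^2$, $g(x) = -x$, and stores polynomials as lists of exact `Fraction` coefficients. The helper names (`solve_linear_problem`, `tam_iterates`) are hypothetical, and this is not the authors' Mathematica implementation.

```python
from fractions import Fraction

# Polynomials are lists of exact coefficients: p[k] multiplies x**k.

def add(p, q):
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0) for k in range(n)]

def mul(p, q):
    r = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return r

def integrate(p):
    """Antiderivative that vanishes at x = 0."""
    return [Fraction(0)] + [Fraction(c) / (k + 1) for k, c in enumerate(p)]

def solve_linear_problem(rhs, y0, y1):
    """Solve L(u) = u'' = rhs with u(0) = y0, u'(0) = y1 by integrating twice."""
    return add([Fraction(y0), Fraction(y1)], integrate(integrate(rhs)))

def tam_iterates(n_iter, y0=0, y1=1):
    """TAM iterates u_0, ..., u_n for the assumed model y'' = 6*y**2 + x.

    With L(u) = u'', N(u) = -6*u**2 and g(x) = -x:
      L(u_0) + g = 0            ->  u_0''     = x
      L(u_{n+1}) + g + N(u_n) = 0  ->  u_{n+1}'' = x + 6*u_n**2
    each subject to the same initial conditions.
    """
    x = [Fraction(0), Fraction(1)]
    u = solve_linear_problem(x, y0, y1)
    iterates = [u]
    for _ in range(n_iter):
        rhs = add(x, [6 * c for c in mul(u, u)])
        u = solve_linear_problem(rhs, y0, y1)
        iterates.append(u)
    return iterates

u = tam_iterates(2)
print([str(c) for c in u[1]])  # ['0', '1', '0', '1/6', '1/2', '0', '1/15', '0', '1/336']
```

Because $L$ is simply $d^2/dx^2$ here, each linear sub-problem is solved exactly by two integrations from 0 to $x$; a different choice of $L$ would need its own linear solver.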

2.2 The basic idea for the DJM

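Applying the inverse operator $L^{-1}$ to the nonlinear problem given by (1) and (2), we obtain

$$u(x) = f(x) + N(u(x)), \tag{8}$$

where $f(x)$ collects the terms arising from the given conditions together with $-L^{-1}g(x)$, and, keeping the same symbol for simplicity, $N(u)$ now denotes the nonlinear term $-L^{-1}N(u)$. The solution $u$ of Eq. (8) can be given by the following series (Daftardar-Gejji and Jafari, 2006):

$$u = \sum_{i=0}^{\infty} u_i.$$

The nonlinear operator is decomposed as

$$N\left(\sum_{i=0}^{\infty} u_i\right) = N(u_0) + \sum_{i=1}^{\infty}\left\{N\left(\sum_{j=0}^{i} u_j\right) - N\left(\sum_{j=0}^{i-1} u_j\right)\right\},$$

which leads to the recurrence relation

$$u_0 = f, \qquad u_1 = N(u_0), \qquad u_{m+1} = N(u_0 + \cdots + u_m) - N(u_0 + \cdots + u_{m-1}), \quad m = 1, 2, \ldots$$

Hence,

$$u = f + \sum_{i=1}^{\infty} u_i. \tag{13}$$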
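A minimal sketch of the DJM recurrence on an assumed test case ($y'' = 6y^2 + x$ with $y(0) = 0$, $y'(0) = 1$, chosen for illustration only), for which $f = x + x^3/6$ and $N(u) = L^{-1}[6u^2]$, with $L^{-1}$ the double integral from 0 to $x$. Names such as `djm_terms` and `partial_sum` are hypothetical; the code only illustrates the recurrence with exact rational coefficients.

```python
from fractions import Fraction

def add(p, q):
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0) for k in range(n)]

def sub(p, q):
    return add(p, [-c for c in q])

def mul(p, q):
    r = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return r

def integrate(p):
    """Antiderivative that vanishes at x = 0."""
    return [Fraction(0)] + [Fraction(c) / (k + 1) for k, c in enumerate(p)]

def N(u):
    """Assumed integral operator of u = f + N(u): N(u) = L^{-1}[6*u**2]."""
    return integrate(integrate([6 * c for c in mul(u, u)]))

def djm_terms(f, n_terms):
    """Return u_0, ..., u_{n_terms-1} of the DJM recurrence (n_terms >= 2)."""
    terms = [f, N(f)]                      # u_0 = f, u_1 = N(u_0)
    s_prev, s = f, add(f, terms[1])        # partial sums S_0 and S_1
    while len(terms) < n_terms:
        u_next = sub(N(s), N(s_prev))      # u_{m+1} = N(S_m) - N(S_{m-1})
        terms.append(u_next)
        s_prev, s = s, add(s, u_next)
    return terms

def partial_sum(terms):
    s = [Fraction(0)]
    for t in terms:
        s = add(s, t)
    return s

# f = y(0) + y'(0)*x + L^{-1}[x] = x + x**3/6 under the assumed conditions
f = [Fraction(0), Fraction(1), Fraction(0), Fraction(1, 6)]
terms = djm_terms(f, 3)
print([str(c) for c in partial_sum(terms[:2])])
# ['0', '1', '0', '1/6', '1/2', '0', '1/15', '0', '1/336']
```

The recurrence telescopes: the partial sums satisfy $u_0 + \cdots + u_{m+1} = f + N(u_0 + \cdots + u_m)$, which is what the check below verifies.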

2.3 The basic idea of the Banach contraction method (BCM)

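Consider Eq. (8) as a general functional equation. In order to implement the BCM, we define the successive approximations (Daftardar-Gejji and Bhalekar, 2009):

$$u_0 = f, \qquad u_1 = u_0 + N(u_0), \qquad u_2 = u_0 + N(u_1), \qquad \ldots, \qquad u_n = u_0 + N(u_{n-1}), \quad n = 1, 2, \ldots$$

If $N$ is a contraction, the sequence converges by the Banach fixed point theorem and the solution is

$$u = \lim_{n \to \infty} u_n. \tag{15}$$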
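The BCM successive approximations can be sketched in the same way, again for the assumed test case $y'' = 6y^2 + x$, $y(0) = 0$, $y'(0) = 1$ (illustrative conditions, not from the paper). `bcm_iterates` is a hypothetical helper name.

```python
from fractions import Fraction

def add(p, q):
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0) for k in range(n)]

def mul(p, q):
    r = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return r

def integrate(p):
    """Antiderivative that vanishes at x = 0."""
    return [Fraction(0)] + [Fraction(c) / (k + 1) for k, c in enumerate(p)]

def N(u):
    """Assumed integral operator N(u) = L^{-1}[6*u**2] (double integral from 0)."""
    return integrate(integrate([6 * c for c in mul(u, u)]))

def bcm_iterates(f, n_iter):
    """Successive approximations u_0 = f, u_n = u_0 + N(u_{n-1})."""
    iterates = [f]
    for _ in range(n_iter):
        iterates.append(add(f, N(iterates[-1])))
    return iterates

f = [Fraction(0), Fraction(1), Fraction(0), Fraction(1, 6)]   # x + x**3/6
u = bcm_iterates(f, 2)
print([str(c) for c in u[2][:8]])  # ['0', '1', '0', '1/6', '1/2', '0', '1/15', '1/7']
```

Since $u_n = f + N(u_{n-1})$ coincides with the DJM partial sums and, for $L = d^2/dx^2$, with the TAM iterates, the three methods produce identical approximations in this setting, in line with the observation made for the test problems in Section 4.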

3 The convergence of the proposed iterative methods

In this section, we present the fundamental concepts and theorems on the convergence of the presented methods (Odibat, 2010).

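The iterates generated by the DJM can be used directly to prove convergence. However, for the convergence proof of the TAM or the BCM, the following procedure should be used for handling Eq. (1) with the given conditions (2). We define the terms

$$v_0 = u_0, \quad v_1 = F[v_0], \quad v_2 = F[v_0 + v_1], \quad \ldots, \quad v_{n+1} = F[v_0 + v_1 + \cdots + v_n], \tag{17}$$

where the operator $F$ is given by

$$F\left[\sum_{i=0}^{k} v_i\right] = S_{k+1} - \sum_{i=0}^{k} v_i, \tag{18}$$

and $S_{k+1}$ denotes the next TAM (or BCM) iterate computed from the current approximation $\sum_{i=0}^{k} v_i$. In this way $\sum_{i=0}^{k} v_i = u_k$, and therefore

$$u(x) = \lim_{n \to \infty} u_n(x) = \sum_{n=0}^{\infty} v_n(x). \tag{21}$$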

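Let $F$, defined in (18), be an operator from a Hilbert space $H$ to $H$. The series solution $\sum_{i=0}^{n} v_i(x)$ converges if there exists $0 < \gamma < 1$ such that $\|F[v_0 + v_1 + \cdots + v_{i+1}]\| \le \gamma \|F[v_0 + v_1 + \cdots + v_i]\|$ (that is, $\|v_{i+1}\| \le \gamma \|v_i\|$) for all $i \in \mathbb{N} \cup \{0\}$.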

This theorem is a special case of Banach's fixed point theorem and provides a sufficient condition for convergence.

See (Odibat, 2010). □
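The convergence condition can be checked numerically by estimating the ratios $\|v_{i+1}\| / \|v_i\|$ for the computed terms. The sketch below is an illustration under stated assumptions: it approximates the maximum norm by sampling a uniform grid on $[a, b]$ (a grid maximum can slightly underestimate the true supremum), checks only the finitely many computed ratios, and uses hypothetical helper names (`sup_norm`, `beta_values`, `converges`).

```python
def sup_norm(v, a, b, n_grid=1001):
    """Grid estimate of max |v(x)| on [a, b] (may underestimate the true supremum)."""
    return max(abs(v(a + (b - a) * k / (n_grid - 1))) for k in range(n_grid))

def beta_values(terms, a, b, n_grid=1001):
    """Ratios ||v_{i+1}|| / ||v_i||, taken as 0 when ||v_i|| = 0."""
    norms = [sup_norm(v, a, b, n_grid) for v in terms]
    return [norms[i + 1] / norms[i] if norms[i] != 0 else 0.0
            for i in range(len(norms) - 1)]

def converges(betas):
    """Practical proxy for the theorem: every computed ratio lies in [0, 1)."""
    return all(0 <= beta < 1 for beta in betas)

# Illustrative terms v_i(x) = (x/2)**i on [0, 1]; every ratio should equal 1/2.
terms = [lambda x, i=i: (x / 2) ** i for i in range(4)]
betas = beta_values(terms, 0.0, 1.0)
print(betas, converges(betas))  # [0.5, 0.5, 0.5] True
```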

If the series solution $\sum_{i=0}^{\infty} v_i(x)$ converges, then it represents the exact solution of the current nonlinear problem.

See (Odibat, 2010). □

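Suppose that the series solution $\sum_{i=0}^{\infty} v_i(x)$ presented by (21) converges to the solution $u(x)$. If the truncated series $\sum_{i=0}^{j} v_i(x)$ is used as an approximation to the solution of the current problem, then the maximum error $E_j(x)$ is estimated by

$$E_j(x) = \left\| u(x) - \sum_{i=0}^{j} v_i(x) \right\| \le \frac{\gamma^{j+1}}{1-\gamma}\, \|v_0\|.$$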

See (Odibat, 2010). □
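The error estimate of the last theorem is a short computation once $\gamma$ and $\|v_0\|$ are known. The sketch below (hypothetical helper names) evaluates the bound and finds the smallest truncation index $j$ whose estimate meets a tolerance; taking $\gamma$ as the largest computed ratio is an assumption of the sketch.

```python
def truncation_bound(gamma, j, v0_norm):
    """Estimate gamma**(j + 1) / (1 - gamma) * ||v_0|| for the error of sum_{i=0}^{j} v_i."""
    if not 0 <= gamma < 1:
        raise ValueError("the estimate requires 0 <= gamma < 1")
    return gamma ** (j + 1) / (1 - gamma) * v0_norm

def smallest_j(gamma, v0_norm, tol):
    """Smallest truncation index j whose error estimate is at most tol (tol > 0)."""
    if tol <= 0:
        raise ValueError("tol must be positive")
    j = 0
    while truncation_bound(gamma, j, v0_norm) > tol:  # terminates since gamma < 1
        j += 1
    return j

print(truncation_bound(0.5, 2, 1.0))  # 0.25
print(smallest_j(0.5, 1.0, 0.01))     # 7
```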

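Theorems 4.1 and 4.2 state that the solution obtained by any of the presented methods, i.e. relation (5) (for the TAM), relation (13) (for the DJM), relation (15) (for the BCM), or (17), converges to the exact solution under the condition that there exists $0 < \gamma < 1$ such that $\|F[v_0 + \cdots + v_{i+1}]\| \le \gamma \|F[v_0 + \cdots + v_i]\|$ (that is, $\|v_{i+1}\| \le \gamma \|v_i\|$) for all $i \in \mathbb{N} \cup \{0\}$. In other words, if for each $i$ we define the parameters

$$\beta_i = \begin{cases} \dfrac{\|v_{i+1}\|}{\|v_i\|}, & \|v_i\| \ne 0, \\[4pt] 0, & \|v_i\| = 0, \end{cases}$$

then the series solution $\sum_{i=0}^{\infty} v_i(x)$ converges to the exact solution $u(x)$ when $0 \le \beta_i < 1$ for all $i \in \mathbb{N} \cup \{0\}$.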

4 Test problems

Problem 1:

The Painlevé equation I is given in the following form (Hesameddini and Peyrovi, 2009):

Solving problem 1 by the TAM:

To solve Eq. (24) by the TAM, we write it in the following form:

We continue in this way to obtain further approximations, but for brevity the terms are not listed.

Solving problem 1 by the DJM:

Consider Eq. (24) with the given initial conditions.

Integrating both sides of Eq. (24) twice from 0 to x and using the given initial conditions, we obtain

Solving problem 1 by the BCM:

Consider Eq. (24). Following the same procedure as in the DJM, we again obtain Eq. (32), which provides the initial approximation and the nonlinear operator for the BCM.

Applying the BCM, we obtain the successive approximations. We continue in this way to obtain further approximations, which are not listed for brevity.

Clearly, the three proposed techniques yield the same approximate solutions.

To assess the convergence of the approximate solution obtained for problem 1, the relations given in Eqs. (17)–(21) will be used. The iterative scheme for Eq. (24) can be formulated as

By applying the TAM, the operator F defined in Eq. (18), in which the required term is the solution of the following problem, becomes

On the other hand, one can use the iterative approximations directly when applying the DJM. Therefore, we have the following terms:

As shown in the convergence analysis of the proposed methods, the terms of the series in (21) satisfy the convergence conditions; evaluating the parameter values for this case, we get

To examine the accuracy of the approximate solutions obtained by the proposed methods for Eq. (24), and since the exact solution is unknown, the maximal error remainder will be calculated. The error remainder function for problem 1 can be defined as

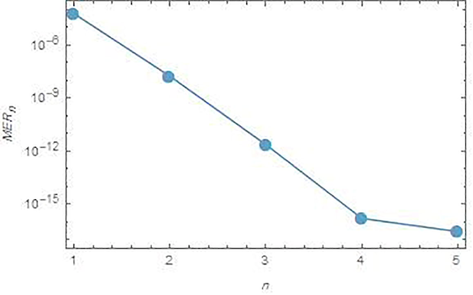

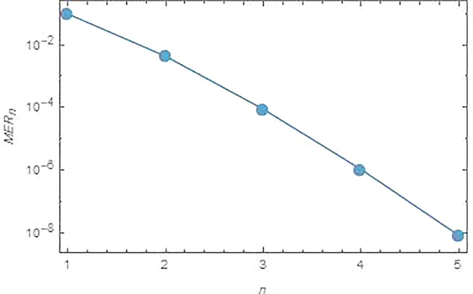

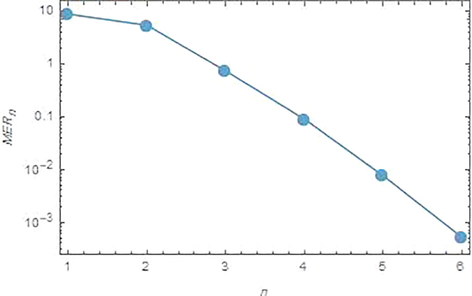

Fig. 1 shows logarithmic plots of the maximal error remainder of the approximate solutions obtained by the proposed iterative methods, which indicate the efficiency of these methods: the errors decrease as the number of iterations increases.
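For an assumed test case $y'' = 6y^2 + x$ with $y(0) = 0$, $y'(0) = 1$ (not necessarily the exact setting of Eq. (24)), the error remainder of an approximation $u_n$ is $ER_n(x) = u_n''(x) - 6u_n^2(x) - x$, and its maximum absolute value on an interval gives the maximal error remainder $MER_n$ (the symbols $ER_n$ and $MER_n$ are this sketch's notation). The code keeps $ER_n$ as an exact polynomial, to avoid cancellation, and samples it on the assumed interval $[0, 0.5]$; helper names are hypothetical.

```python
from fractions import Fraction

def add(p, q):
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0) for k in range(n)]

def mul(p, q):
    r = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return r

def integrate(p):
    """Antiderivative that vanishes at x = 0."""
    return [Fraction(0)] + [Fraction(c) / (k + 1) for k, c in enumerate(p)]

def derivative(p):
    return [k * c for k, c in enumerate(p)][1:]

def horner(coeffs, x):
    acc = 0.0
    for c in reversed(coeffs):
        acc = acc * x + c
    return acc

def iterates(n_iter, y0=0, y1=1):
    """TAM/BCM iterates for the assumed test case y'' = 6*y**2 + x."""
    x = [Fraction(0), Fraction(1)]
    ics = [Fraction(y0), Fraction(y1)]
    u = add(ics, integrate(integrate(x)))
    out = [u]
    for _ in range(n_iter):
        u = add(ics, integrate(integrate(add(x, [6 * c for c in mul(u, u)]))))
        out.append(u)
    return out

def error_remainder(u):
    """ER(x) = u'' - 6*u**2 - x as an exact polynomial."""
    return add(derivative(derivative(u)), add([-6 * c for c in mul(u, u)], [0, -1]))

def max_error_remainder(u, b=0.5, n_grid=201):
    """Grid estimate of max |ER(x)| on the assumed interval [0, b]."""
    coeffs = [float(c) for c in error_remainder(u)]
    return max(abs(horner(coeffs, b * k / (n_grid - 1))) for k in range(n_grid))

mers = [max_error_remainder(u) for u in iterates(3)]
print(mers)
```

On this interval the values drop quickly with $n$, which mirrors the qualitative behaviour reported in Fig. 1, although the numbers refer only to the assumed test case.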

Logarithmic plots of the maximal error remainder versus n from 1 to 5 for problem 1.

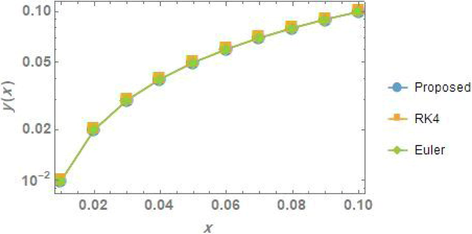

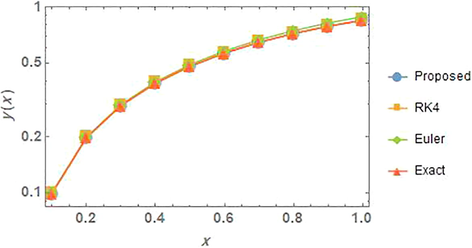

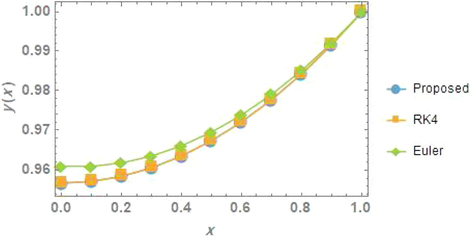

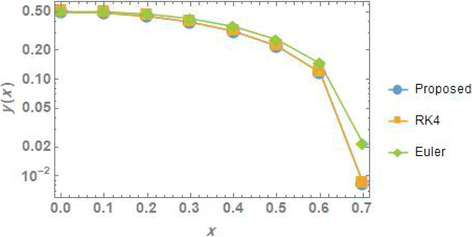

We have also compared numerically the solutions obtained by the proposed methods with those of the Runge-Kutta (RK4) and Euler methods. The comparison for problem 1 is given in Fig. 2, and good agreement can be seen.

The comparison of the solutions for problem 1.
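The RK4 and Euler comparison can be reproduced in outline by rewriting a second-order equation as a first-order system in $(y, y')$. The sketch assumes the test case $y'' = 6y^2 + x$, $y(0) = 0$, $y'(0) = 1$ (illustrative, not taken from the paper) and compares against the first TAM/BCM iterate for that case, $u_1 = x + x^3/6 + x^4/2 + x^6/15 + x^8/336$; the step counts and the endpoint $x = 0.5$ are arbitrary choices, and the function names are hypothetical.

```python
def rhs(x, state):
    """Assumed test case y'' = 6*y**2 + x as the system (y, y')' = (y', 6*y**2 + x)."""
    y, dy = state
    return (dy, 6.0 * y * y + x)

def rk4(f, x0, state0, x_end, n_steps):
    """Classical fourth-order Runge-Kutta method with a fixed step."""
    h = (x_end - x0) / n_steps
    s = tuple(state0)
    for k in range(n_steps):
        x = x0 + k * h
        k1 = f(x, s)
        k2 = f(x + h / 2, tuple(si + h / 2 * ki for si, ki in zip(s, k1)))
        k3 = f(x + h / 2, tuple(si + h / 2 * ki for si, ki in zip(s, k2)))
        k4 = f(x + h, tuple(si + h * ki for si, ki in zip(s, k3)))
        s = tuple(si + h / 6 * (a + 2 * b + 2 * c + d)
                  for si, a, b, c, d in zip(s, k1, k2, k3, k4))
    return s

def euler(f, x0, state0, x_end, n_steps):
    """Explicit (forward) Euler method with a fixed step."""
    h = (x_end - x0) / n_steps
    s = tuple(state0)
    for k in range(n_steps):
        s = tuple(si + h * ki for si, ki in zip(s, f(x0 + k * h, s)))
    return s

def series_u1(x):
    """First TAM/BCM iterate for the assumed test case."""
    return x + x**3 / 6 + x**4 / 2 + x**6 / 15 + x**8 / 336

y_rk4 = rk4(rhs, 0.0, (0.0, 1.0), 0.5, 50)[0]
y_euler = euler(rhs, 0.0, (0.0, 1.0), 0.5, 50)[0]
print(y_rk4, y_euler, series_u1(0.5))
```

Euler is only first-order accurate, so its gap to RK4 shrinks roughly in proportion to the step size, while a higher iterate of the series would move closer to the RK4 value.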

Problem 2:

The Painlevé equation II is given in the following form (Hesameddini and Latifizadeh, 2012):

Solving problem 2 by the TAM:

To solve Eq. (39) by the TAM, we write it in the following form:

We continue in this manner to obtain further approximations, but for brevity they are not listed.

Solving problem 2 by the DJM:

Consider Eq. (39) with the given initial conditions.

Integrating both sides of Eq. (39) twice from 0 to x and using the given initial conditions, we obtain

By applying the DJM, we obtain the successive terms. We continue in this way to obtain further approximations, but for brevity the terms are not listed.

Solving problem 2 by the BCM:

Consider Eq. (39). Following the same procedure as in the DJM, we again obtain Eq. (43), which provides the initial approximation and the nonlinear operator for the BCM.

Applying the BCM, we obtain the successive approximations. We continue in this way to obtain further approximations, which are not listed for brevity.

The three proposed methods yield the same solutions. Hence, as shown in the convergence analysis of the previous section and following a procedure similar to that used for problem 1, the terms of the series in Eq. (21) satisfy the convergence conditions; evaluating the parameter values for each iterative method, we get

Since these parameter values are less than 1, the proposed iterative methods are convergent.

To examine the accuracy of the approximate solution for this problem, the error remainder function is evaluated:

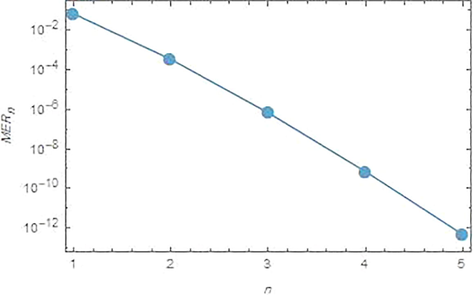

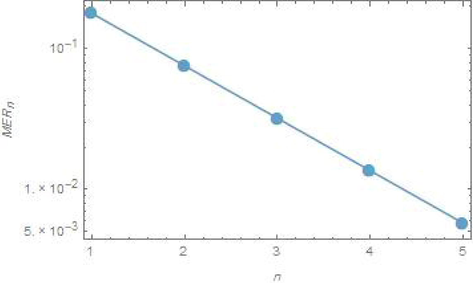

Logarithmic plots of the maximal error remainder versus n from 1 to 5 for problem 2.

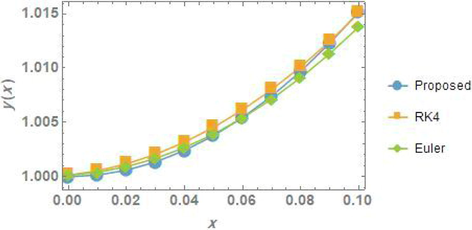

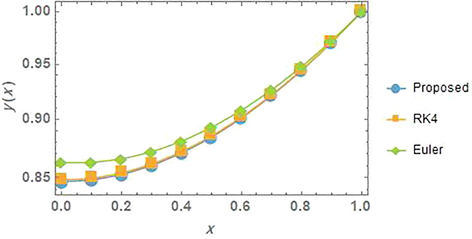

The numerical comparison between the solutions obtained by the proposed methods and the Runge-Kutta (RK4) and Euler methods for problem 2 is given in Fig. 4, and good agreement is clearly obtained.

The comparison of the solutions for problem 2.

Problem 3:

The pendulum equation is given in the following form (Duan, 2011):

Solving problem 3 by the TAM:

To solve the pendulum equation (48) with the given conditions by the TAM, we write it in the following form:

Solving problem 3 by the DJM:

Consider the pendulum equation (48) with the given conditions.

Integrating both sides of Eq. (48) twice from 0 to x, we get

and, reducing the double integral in Eq. (51) to a single integral (Wazwaz, 2015), we obtain

Solving problem 3 by the BCM:

Consider Eq. (48). Applying the same procedure as in the DJM, we again obtain Eq. (52), which provides the initial approximation and the nonlinear operator for the BCM. By applying the BCM, we obtain:

We continue in this way to obtain further approximations, which are not listed for brevity.
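The reduction from a double to a single integral used for problem 3 is the standard identity $\int_0^x \int_0^t F(s)\,ds\,dt = \int_0^x (x - s) F(s)\,ds$ (Cauchy's formula for repeated integration). The sketch below checks it numerically with composite Simpson quadrature; the integrands are illustrative and the helper names are hypothetical.

```python
import math

def simpson(g, a, b, n=200):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3

def double_integral(F, x, n=200):
    """Iterated integral  int_0^x int_0^t F(s) ds dt  by nested quadrature."""
    return simpson(lambda t: simpson(F, 0.0, t, n), 0.0, x, n)

def single_integral(F, x, n=200):
    """Equivalent single integral  int_0^x (x - s) F(s) ds."""
    return simpson(lambda s: (x - s) * F(s), 0.0, x, n)

# For F = cos the exact value of both forms is 1 - cos(x).
print(double_integral(math.cos, 1.0), single_integral(math.cos, 1.0), 1 - math.cos(1.0))
```

The single-integral form needs one quadrature instead of a nested one, which is why it is convenient when the integrand is nonlinear.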

The three proposed methods yield identical solutions. Hence, as shown in the convergence analysis of the previous section, the terms of the series in Eq. (21) satisfy the convergence conditions; evaluating the parameter values for each iterative method, we get

To examine the accuracy of the obtained approximate solution for this problem, the error remainder function is evaluated

Logarithmic plots of the maximal error remainder versus n from 1 to 5 for problem 3.

In addition, the numerical comparison among the solutions obtained by the proposed methods, the exact solution, and the Runge-Kutta (RK4) and Euler methods for problem 3 is presented in Fig. 6. The agreement between the solutions is clearly visible.

The comparison of the solutions for problem 3.

Problem 4:

The nonlinear reactive transport model is given in the following form (Ellery and Simpson, 2011):

Solving problem 4 by the TAM:

To solve Eq. (58) with the given initial conditions by the TAM, we write it in the following form:

The next approximation can be found by solving the problem

with the given conditions. We continue in this manner to obtain the fourth and fifth approximations, but for brevity they are not listed.

Solving problem 4 by the DJM:

Consider Eq. (58) with the given initial conditions.

Integrating both sides of Eq. (58) twice from 0 to x, we get

Solving problem 4 by the BCM:

Consider Eq. (58). Following the same procedure as in the DJM, we again obtain Eq. (62), which provides the initial approximation and the nonlinear operator for the BCM.

By applying the BCM, we obtain:

The value of a is determined from the given boundary condition. Now, the parameter values can be computed to verify the convergence condition for the terms of the series given in Eq. (21); we get

To examine the accuracy of the approximate solution for this problem, the error remainder function is calculated:

Fig. 7 shows logarithmic plots of the maximal error remainder of the approximate solutions obtained by the proposed iterative methods, which indicate that the methods are reliable and effective; again, the errors decrease as the number of iterations increases.

Logarithmic plots of the maximal error remainder versus n from 1 to 5 for problem 4.

Moreover, the numerical comparison between the solutions obtained by the proposed methods and the Runge-Kutta (RK4) and Euler methods for problem 4 is presented in Fig. 8, and good agreement can be clearly seen.

The comparison of the solutions for problem 4.

Problem 5:

The temperature distribution equation in a uniformly thick rectangular fin radiating to free space is given in the following form (Mohyud-Din et al., 2017):

Eq. (66) will be solved by the three iterative methods with the given initial conditions, where the unknown constant a will be evaluated later from the given boundary condition. The effect of the parameter ε on the obtained solution will be examined numerically later.
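The unknown constant $a$ can be found by a shooting-type step: compute an approximation $u_n(x; a)$ and adjust $a$ until the boundary condition holds. The sketch assumes the radiating-fin form $y'' = \varepsilon y^4$ with $y(0) = a$, $y'(0) = 0$ and $y(1) = 1$ (an assumption, since Eq. (66) and its conditions are not reproduced in this text), uses BCM iterates with floating-point polynomial coefficients, and solves for $a$ by bisection on $[0, 1]$. The helper names are hypothetical.

```python
def add(p, q):
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0.0) + (q[k] if k < len(q) else 0.0) for k in range(n)]

def mul(p, q):
    r = [0.0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return r

def integrate(p):
    """Antiderivative vanishing at x = 0."""
    return [0.0] + [c / (k + 1) for k, c in enumerate(p)]

def horner(p, x):
    acc = 0.0
    for c in reversed(p):
        acc = acc * x + c
    return acc

def bcm_fin(a, eps, n_iter=3):
    """BCM iterates u_n = a + L^{-1}[eps * u_{n-1}**4] for the assumed model
    y'' = eps * y**4, y(0) = a, y'(0) = 0 (L^{-1}: double integral from 0)."""
    u = [a]
    for _ in range(n_iter):
        u2 = mul(u, u)
        u = add([a], integrate(integrate([eps * c for c in mul(u2, u2)])))
    return u

def solve_for_a(eps, n_iter=3, tol=1e-12):
    """Bisection for a in [0, 1] such that u_n(1; a) = 1 (assumed condition y(1) = 1)."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if horner(bcm_fin(mid, eps, n_iter), 1.0) < 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for eps in (0.0, 0.2, 0.5):
    print(eps, solve_for_a(eps))
```

Bisection is a safe choice here because, for $\varepsilon \ge 0$, $u_n(1; a)$ increases with $a$, equals 0 at $a = 0$ and is at least 1 at $a = 1$, so the bracket $[0, 1]$ always contains the root in this assumed setting.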

Solving problem 5 by the TAM:

To solve Eq. (66) with the given initial conditions by the TAM, we write it in the following form:

with the given conditions. Solving this problem gives the first approximation. Applying the same process to the next approximation and solving the resulting problem gives the following one. Continuing in this manner, we obtain the other approximations, but for brevity the evaluated terms are not listed.

Solving problem 5 by the DJM:

Consider Eq. (66) with the given initial conditions.

Integrating both sides of Eq. (66) twice from 0 to x, we get

Solving problem 5 by the BCM:

Consider Eq. (66). Applying the same procedure as in the DJM, we again obtain Eq. (70), which provides the initial approximation and the nonlinear operator for the BCM.

Applying the BCM, we obtain:

We continue in this way to obtain further approximations, which are not listed for brevity.

The value of a is obtained from the given boundary condition and depends on the value of ε. For the chosen value of ε, the corresponding value of a is found. Now, the parameter values can be computed to verify the convergence condition for the terms of the series given in Eq. (21); we have

To examine the accuracy of the approximate solution for this problem, the error remainder function is computed, and the maximal error remainder is:

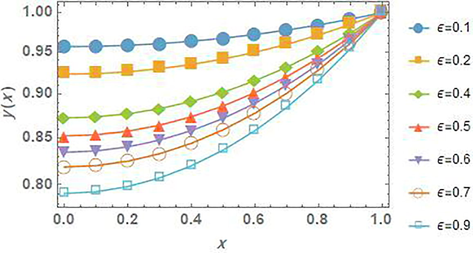

Fig. 9 shows logarithmic plots of the maximal error remainder of the approximate solutions obtained by the proposed iterative methods; the errors decrease as the number of iterations increases.

Logarithmic plots of the maximal error remainder versus n from 1 to 5 for problem 5.

The numerical comparison between the solutions obtained by the proposed methods and the Runge-Kutta (RK4) and Euler methods for problem 5 is given in Fig. 10; once again, good agreement between the solutions can be noticed.

The comparison of the solutions for problem 5.

Moreover, we have plotted the effect of different values of ε in Fig. 11.

The numerical effect of the ε values for problem 5.

Problem 6:

The equation of motion for a mass system with serial linear and nonlinear stiffness on a frictionless contact surface is given by (Ganji and Babazadeh, 2009):

Solving problem 6 by the TAM:

To solve Eq. (75) with the given initial conditions by the TAM, we define the following operators:

Solving the initial problem (77), one can get

The first iteration can be found by solving

with the given conditions.

The solution of this problem gives the first iteration. Applying the same process to obtain the next iteration, we solve

with the given conditions.

Solving this problem gives the next iteration. Continuing in this manner, we obtain further approximations, but for brevity they are not listed.

Solving problem 6 by the DJM:

Consider Eq. (77) with the given initial conditions.

Integrating both sides of Eq. (77) twice from 0 to x, we get

By applying the DJM, we obtain the successive terms. We continue in this way to obtain further approximations, but the terms are not listed.

Solving problem 6 by the BCM:

Consider Eq. (75). Following a procedure similar to that used in the DJM, we again obtain Eq. (79), which provides the initial approximation and the nonlinear operator for the BCM. Applying the BCM, we obtain:

Now, the parameter values for the terms of the series given in Eq. (21) are evaluated to verify the convergence condition; we have

To examine the accuracy of the approximate solution for this problem, the error remainder function is

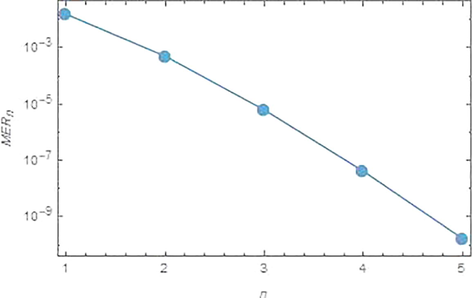

Fig. 12 shows logarithmic plots of the maximal error remainder of the approximate solutions obtained by the proposed iterative methods; again, the errors decrease as the number of iterations increases.

Logarithmic plots of the maximal error remainder versus n from 1 to 5 for problem 6.

The numerical comparison between the solutions obtained by the proposed methods and the Runge-Kutta (RK4) and Euler methods for problem 6 is presented in Fig. 13.

The comparison of the solutions for problem 6.

In Table 1, the convergence rate (CR) for all problems was estimated by using the following formula

| Methods | Problem 1 | Problem 2 | Problem 3 | Problem 4 | Problem 5 | Problem 6 |
|---|---|---|---|---|---|---|
| The CR | 1.03613 | 1.10076 | 1.13879 | 1. | 1.14431 | 1.05231 |

Linear convergence has been achieved by all methods, since all CR values are close to 1.
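The CR formula behind Table 1 did not survive extraction. As an assumption, one common way to estimate the order of convergence from successive error measures $e_n$ (for example, the maximal error remainders) is $p_n \approx \ln(e_{n+1}/e_n) / \ln(e_n/e_{n-1})$, where $p \approx 1$ indicates linear convergence. The sketch below implements this estimator; it is not necessarily the formula used for Table 1, and `order_estimates` is a hypothetical name.

```python
import math

def order_estimates(errors):
    """p_n = ln(e_{n+1}/e_n) / ln(e_n/e_{n-1}) for positive errors with e_n != e_{n-1}."""
    return [math.log(errors[n + 1] / errors[n]) / math.log(errors[n] / errors[n - 1])
            for n in range(1, len(errors) - 1)]

linear = [0.5 ** n for n in range(1, 6)]   # e_{n+1} = e_n / 2
quadratic = [1e-1, 1e-2, 1e-4, 1e-8]       # e_{n+1} = e_n ** 2
print(order_estimates(linear))             # [1.0, 1.0, 1.0]
print(order_estimates(quadratic))
```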

5 Conclusion

In this paper, we have successfully used the TAM, DJM and BCM to solve several nonlinear second-order ODEs arising in physics. Each solution has been obtained in series form. We then solved these problems with the numerical Runge-Kutta (RK4) and Euler methods and compared the numerical results with the approximate solutions; they were in good agreement. All calculations in this study were carried out with Mathematica® 10.

Acknowledgement

The author would like to thank the anonymous referees, Managing Editor and Editor in Chief for their valuable suggestions.

References

- A semi-analytical iterative method for solving nonlinear thin film flow problems. Chaos, Solitons Fractals. 2017;99:52-56.

- A reliable iterative method for solving Volterra integro-differential equations and some applications for the Lane-Emden equations of the first kind. Monthly Not. R. Astronomical Soc. 2015;448:3093-3104.

- A semi analytical iterative technique for solving Duffing equations. Int. J. Pure Appl. Math. 2016;108(4):871-885.

- AL-Jawary, M.A., Hatif, S. A semi-analytical iterative method for solving differential algebraic equations. Ain Shams Eng. J. 2017 (In Press).

- A semi-analytical iterative technique for solving chemistry problems. J. King Saud Univ. 2017;29(3):320-332.

- A semi-analytical method for solving Fokker-Planck's equations. J. Assoc. Arab Univ. Basic Appl. Sci. 2017;24:254-262.

- Solving evolution equations using a new iterative method. Numer. Methods Partial Diff. Eq. 2010;26(4):906-916.

- New iterative method: application to partial differential equations. Appl. Math. Comput. 2008;203(2):778-783.

- Solving fractional diffusion-wave equations using a new iterative method. Fractional Calculus Appl. Anal. 2008;11(2):193-202.

- Solving nonlinear functional equation using Banach contraction principle. Far East J. Appl. Math. 2009;34(3):303-314.

- An iterative method for solving nonlinear functional equations. J. Math. Anal. Appl. 2006;316(2):753-763.

- New recurrence algorithms for the nonclassic Adomian polynomials. Comput. Math. Appl. 2011;62:2961-2977.

- An iterative method for solving partial differential equations and solution of Korteweg-de Vries equations for showing the capability of the iterative method. World Appl. Programming. 2013;3(8):320-327.

- An analytical method to solve a general class of nonlinear reactive transport models. Chem. Eng. J. 2011;169:313-318.

- M. H. Jalaei, H. Tashakkorian. Application of He's variational iteration method for solving nonlinear BBMB equations and free vibration of systems. Acta Applicandae Mathematicae. 2009;106:359-367.

- Variational iteration method – a kind of non-linear analytical technique: some examples. Int. J. Non Linear Mech. 1999;34:699-708.

- A reliable treatment of homotopy perturbation method for solving second Painlevé equations. Int. J. Res. Rev. Appl. Sci. 2012;12(2):201-206.

- The use of variational iteration method and homotopy perturbation method for Painlevé equation I. Appl. Math. Sci. 2009;3(38):1861-1871.

- Some Topics in Nonlinear Functional Analysis. John Wiley and Sons; 1985.

- Application of homotopy analysis method in nonlinear oscillations. J. Appl. Mech. 1998;65(4):914-922.

- Optimal variational iteration method for nonlinear problems. J. Assoc. Arab Univ. Basic Appl. Sci. 2017;24:191-197.

- A study on the convergence of variational iteration method. Math. Comput. Modell. 2010;51(9–10):1181-1192.

- A new iterative technique for solving nonlinear second order multi-point boundary value problems. Appl. Math. Comput. 2011;218(4):1457-1466.

- A computational iterative method for solving nonlinear ordinary differential equations. LMS J. Comput. Math. 2015;18(1):730-753.

- A first course in integral equations. World Scientific Publishing Company; 2015.