Translate this page into:

Simple statistical tests selection based parallel computating method ensures the guaranteed global extremum identification

⁎Corresponding author. kovtun_v_v@vntu.edu.ua (Viacheslav Kovtun),

-

Received: ,

Accepted: ,

This article was originally published by Elsevier and was migrated to Scientific Scholar after the change of Publisher.

Abstract

The article proposes a parallel computing oriented method for solving the global minimum finding problems, in which continuous objective functions satisfy the Hölder condition, and the control parameters domain limited by continuous functions is characterized by a positive Lebesgue measure. A typical example of such a task is the discrepancy minimizing problem between the left and right parts of some large system of equilibrium equations (this is a usual situation when describing real process using Markov chain). The method is based on simple statistical tests, thanks to which, at each iteration, growing sets of potential global minima and sets of decrements necessary for estimating the values of the Hölder constants are formed. The article theoretically substantiates and empirically proves the guaranteed convergence of the authors’ method to the real global minimum, which occurs at an exponential rate. For the continuous iterations number, analytical upper estimates of the spacing between the potential global minima and real global minima are formalized, as well as an estimate of the probability of overcoming this spacing is formalized. The decrements sequence approximation, estimation of a priori unknown Hölder constants, estimation of the average number of iterations of the method and probabilistic characteristics of the final solution are analytically justified. In addition to the theoretical proof, the adequacy of the authors’ method has been confirmed empirically. It turned out that both the quality characteristics of the initial results calculated by the authors’ method and the time to obtain them are practically independent of the size of the search area. This expected result is a significant advantage of the authors’ method over analogues.

Keywords

Parallel computating

Applied mathematics

Optimization problem

Global extremum

Statistical tests method

Equilibrium

Nomenclature

-

is the dimension of the vector

-

is the volume of the set of stochastic vectors at the i-th iteration

-

is a set of stochastic, uniformly distributed , vectors

-

is a set of admissible stochastic vectors belonging to the admissible set (see (4)),

-

is the volume of the set

-

is the minimum of the objective function on the admissible set

-

is the cumulative set of potentially global minima

-

is the decrement of the set ,

-

is the cumulative set of decrements; ,

-

is the accumulated set of logarithms of decrements

-

is the accumulated set of logarithms of decrements of smaller ; ,

-

is the accumulated set of logarithms of the volumes of sets

-

is the maximum acceptable error for calculating the values of decrements

-

is the applied number of elements of the accumulated sequence

- ,

-

are estimates of the values of the Hölder constants (see expression (24))

-

is a situation when

-

is the upper estimate of the spacing from the current potentially optimal minimum , obtained on the -th iteration of the method, to the real minimum (see expression (33))

-

is the lower probability estimate (34)

1 Introduction

Optimization is the process of finding the point of the extreme value of a certain (target) function (Stracquadanio and Pardalos, 2019; McNaughton, 2023). It is one of the biggest cornerstones of applied mathematics, physics, engineering, economics, and industry. The scope of its application is vast and includes, for example, minimization of physical quantities at micro and macro levels, maximization of profit or efficiency of logistics chains, etc. Machine learning is also focused on optimization (Huang et al., 2022; Alexiadis, 2023): various regressions and neural networks try to minimize the discrepancy between predicted and real data.

Optimization is an active and relevant sector of scientific research. This sector includes a huge number of thematic fields and their extreme variations. The number of optimization problem statements and their solution methods has been growing like mushrooms after the rain for decades. For example, there is a huge field of Mixed-Integer Programming (Alfant et al., 2023; Zhang et al., 2023), which deals with discrete scenarios. Nondeterministic optimization (Zhang et al., 2022; Yang et al., 2019), based on stochastic principles, is known. There is robust optimization (Fransen and Langelaar, 2023; Castelli et al., 2023), in which fixed parameters are taken into account. The optimization of dynamic systems evolving in time (Yang et al., 2023; Xie et al., 2022) is known. There are various meta-heuristic methods (Marulanda-Durango and Zuluaga-Ríos, 2023; Hirsching et al., 2022): simulated annealing method, genetic algorithms, and swarm evolution methods. There are well-known approaches to optimization using fuzzy logic (Pei et al., 2023).

Let's focus on the global optimization problem of continuously differentiable functions (this will allow us to narrow the area of interest at least a little). The analysis involves evaluating the applicants in a certain quality criteria metric. In this context, first of all, we will mention such criteria as:

- global convergence (global convergence in optimization refers to the ability of an optimization algorithm to guarantee convergence to the global minimum or maximum of the objective function, regardless of the initial conditions. It ensures that the algorithm approximates the optimal solution across the entire search space, not just a local minimum or maximum. This property is crucial to avoid getting stuck in suboptimal solutions, ensuring the discovery of the best solution in the general case of the optimization problem);

- speed of local convergence (the speed of local convergence in optimization refers to the rate at which an optimization algorithm approaches the local minimum or maximum of the objective function near the convergence point. This characteristic determines how quickly the algorithm converges to the optimal solution in the vicinity of the current point, assessing the rate of decrease in the function value. A high speed of local convergence implies rapid approximation to the local optimum, which can be crucial for efficiently solving optimization problems);

- the dimension of the optimization problem, the solution of which is aimed at the method;

- the need to store matrices in the computer memory during the solution process (yes or no);

- use of the Hesse’s matrises in the solution process (yes or no);

- the need for scaling (yes or no) (scaling in optimization refers to the process of transforming variables or parameters in an optimization problem in such a way that they have a similar scale. This is important because different variables may have different orders of magnitude, which can hinder the convergence of optimization algorithms. Scaling helps balance the contribution of each variable, thereby facilitating the optimization process and enhancing the efficiency of converging to the optimal solution).

The first two criteria are analytical, the others characterize the aspects of the directly applied implementation of a particular method.

Let us pay closer attention to the first two of the mentioned criteria. An optimization method is said to have the property of global convergence if its iterations converge to a local minimizer regardless of the initial position. The speed of local convergence shows how quickly the method will find the minimizer after getting into the vicinity of the latter. Note that these two criteria are potentially competing. The so-called hybrid methods (Xu et al., 2023; Wu et al., 2023) are an actual answer to the conflict that manifests itself in an attempt to achieve simultaneous compliance with both the first and second criteria.

Now, having mentioned the main optimization method's qualitative criteria, we can go directly to the methods as such (at least, to the most used of them). Let’s recall the most used methods of smooth unconditional optimization (Ali et al., 2021; Smith and Nair, 2005): the lines method, the fastest descent method, the Newton method, quasi-Newton methods (incl, DFP, SR1, BFGS (Broyden, Fletcher, Goldfarb, Shanno) and its modifications: muffled, with limited memory, etc.), the nonlinear conjugate gradients method, the truncated Newton method. Note that from this list, only the fastest descent method and the truncated Newton method can claim (with limitations) to pass the first criterion (global convergence).

Of course, there are methods for which compliance with the first criterion was the dominant requirement at the creation time. We will distinguish three classes of such methods. The methods we refer to in the first class are focused on the configuration of the objective function and the admissible solutions domain. The most striking representative of this class is the DC functions minimizing method (Fang et al., 2012; Montano et al., 2022). In the method, both the objective function and the constraint functions for the admissible solutions domain are represented as the differences between two convex functions. A complete view of the evolution of this class of global optimization methods can be made by reading the works (Fang et al., 2012; Montano et al., 2022; Shilaja et al., 2022). Methods of the second class are focused on solving global optimization problems with simple configurations of admissible solutions regions (which have, for example, the shape of a parallelepiped) and objective functions characterized a priori by known Hölder constants (Chen and Zheng, 2021; Sovrasov, 2016). The same class can also include methods focused on reducing a multidimensional optimization problem to a one-dimensional one using Peano curves (Sovrasov, 2016). However, if the Hölder constants are unknown, the application of second-class methods is accompanied by considerable uncertainty. Finally, methods belonging to the third class focus on random search and the formalization of intellectualized heuristics to interpret its results (Gorawski et al., 2021).

We would like to separately mention Sub-gradient methods for non-smooth optimization (Tymchenko et al., 2019; Zaiats et al., 2019). Sub-gradient methods for non-smooth optimization are optimization techniques specifically designed to address problems where the objective function is not everywhere differentiable. Unlike traditional gradient methods applicable to smooth functions, sub-gradient methods use sub-gradients, a generalization of gradients, to navigate through optimization landscapes containing non-differentiable components. These methods are particularly useful in convex optimization scenarios involving functions with nonsmooth elements, such as those encountered in support vector machines and lasso regression. Sub-gradient methods iteratively adjust the solution in the direction of the sub-gradient, aiming to converge to a point where the sub-gradient approaches zero, indicating a potential solution to the non-smooth optimization problem. While they may exhibit slower convergence rates compared to methods for smooth optimization, sub-gradient methods are essential for effectively tackling problems with inherent nonsmoothness.

One of the essential problems accompanying the use of stochastic tests in the context of solving optimization problems is the generation of uniformly distributed random vectors in a certain region of the search space. Scientists are looking for ways to effectively apply this problem (Bisikalo and Kharchenko, 2023; Izonin et al., 2022). In this context, we note Hit-and-Run-like methods, Markov chains using methods, and methods that take into account relative entropy. However, a universal method has not yet been found.

Taking into account the strengths and weaknesses of the mentioned analogue methods, we will formulate the necessary attributes of scientific research.

Research object is a process of solving the global minimum finding problems, which continuous objective functions satisfy the Hölder condition, and the control parameters domain limited by continuous functions is characterized by a positive Lebesgue measure and is limited by multidimensional parallelepipeds.

Research subject includes functional analysis theory, probability theory and mathematical statistics, experiment planning theory, and computational methods.

Research aim is to formalize the process of guaranteed solutions to the optimization problems outlined by the research object in the form of a parallel computing oriented method.

Research objectives are:

- formalize the parallel computing oriented method of the guaranteed finding of the global solution of the optimization problem with the objective function and restrictions imposed by algorithmically calculated continuous functions that satisfy the Hölder condition (with a priori unknown values of the corresponding constants);

- formalize the process of generating sets of potentially global extrema as the results of simple statistical tests (as a basic element of each iteration of the method);

- formalize the probabilistic characteristics of the approximate solution, which is the result of the implementation of a finite number of iterations of the authors’ method;

- justify the adequacy of the proposed mathematical apparatus and demonstrate its functionality with an example.

Main contribution. The article proposes a parallel computing oriented method for solving the global minimum finding problems, in which continuous objective functions satisfy the Hölder condition, and the control parameters domain limited by continuous functions is characterized by a positive Lebesgue measure and is limited by multidimensional parallelepipeds. A typical example of such a task is the discrepancy minimizing problem between the left and right parts of some system of equilibrium equations. The method is based on simple statistical tests, thanks to which, at each iteration, growing sets of potential global minima and sets of decrements necessary for estimating the values of the Hölder constants are formed. The article theoretically substantiates and empirically proves the guaranteed convergence of the authors’ method to the real global minimum, which occurs at an exponential rate. For the continuous iterations number, analytical upper estimates of the spacing between the potential global minima and real global minima are formalized, as well as an estimate of the probability of overcoming this spacing is formalized. The decrements sequence approximation, estimation of a priori unknown Hölder constants, estimation of the average number of iterations of the method and probabilistic characteristics of the final solution are analytically justified.

- Statement of the research, which introduces general definitions and clarifies the purpose and objective of the research;

- Formalization of the parallel computing oriented method of the guaranteed finding of global extremum with simple statistical tests selection;

- Specific aspects of the applied application of the presented method;

- Sections devoted to demonstration and analysis of the results of the intended use of the proposed method.

2 Materials and methods

2.1 Statement of the research

The problem of finding the extremum of a function is to determine its largest (maximum) or smallest (minimum) value in a certain range of values of its arguments. The task of the boundaries of this area (as well as the rest of the additional conditions) is implemented in the form of a system of equations and (or) inequalities. In this case, we talk about the conditional problem of finding the extremum of a function or the problem of finding a local extremum. If the range of admissible values of the arguments is not limited, then we are dealing with the problem of finding the global extremum of the function or the optimization problem. Next, we will investigate the problem of finding the global extremum of the function

:

We formalize the canonical form of the optimization problem (1):

The functional relationship between the optimization problems (1) and (2) can be expressed through the continuously differentiable and mutually unique transformation

A functional instance of (3) is, for example, the transformation , , where and - -dimensional vectors are formed by the components and , respectively.

In the problems of applied design, the area of determination of controlled parameters x is not the entire space

, but its area is limited by the

-dimensional parallelepiped

. Let's redefine the optimization problem (1) taking into account this restriction:

Let the objective function of the optimization problem (4) acquire a global minimum at the values of the argument : . Taking into account the continuity of the transformation (3), we can state that the function is bounded from below by , that is, there is a constant a for which the inequality holds.

In the general case, a is unknown, but

in the context of specific optimization problems (for example, where the function

characterizes the discrepancy between the left and right parts of some system of equilibrium equations). At the same time, the function

also satisfies the Hölder condition, but with constants

and

, that is:

Therefore, the aim of our investigation is to create a parallel computing oriented method that will allow us to geting the global extreme of the objective function on the set . The subject of our research will be the simple statistical tests method.

2.2 Formalization of the parallel computing oriented method of the guaranteed finding of global extremum with simple statistical tests selection

The basic element of the authors’ method is the elementary positive cube

mentioned in the formulation of the optimization problem (4). Each i-th iteration

of the method begins with the generation of a set

, which contains

uniformly distributed and independent

stochastic vectors

. Next, among the calculated for the i-th set of the function

values,

, the minimum value

and the corresponding value of the argument

are determined and fixed. Considering that the generation of the set

is carried out by the statistical tests method, the determined optimal value

is stochastic. The transition to the (i+1)-th iteration is accompanied by a regulated increase in the number of stochastic vectors in the set

:

. The result of execution of the (i+1)-th iteration is the set

. The course of the iterative process is accompanied by the growth of accumulated sets

and

,

. The iterative process is completed if during

iterations the dynamics of changes in the values in the set

does not exceed the certain threshold

:

The output result of the method described above is the set , where is the found approximate value of the global extremum of the objective function (4), is the found approximate value of the argument of the objective function (4) for , is an estimate of the spacing between the approximate and actual values of the global extremum of the objective function (4), is an estimate of the event probability.

The set is formed from a matrix of independent stochastic values of dimension , the elements of which are uniformly distributed over the segment . The source of this matrix for the i-th iteration is a random number generator. This matrix is the basis for the formation of independent and uniformly distributed positive elementary parallelepiped stochastic vectors , . Let's examine the probabilistic characteristics of the set .

Let's form the desired set

from the existing stochastic matrix of dimension

in the following way:

or

, where the parameter

characterizes the number of stochastic vectors in the set

, and the parameter

characterizes the coordinates of

the vector

. Taking this

, we formulate the rule for the formation of the set

(taking into account the declared iterative increase in the volume of the latter:

):

Let us divide the

-dimensional parallelepiped

mentioned in (4) into unit cubes in each of the dimensions using a lattice with a uniform step

We characterize as

the probability of such an situation that there is an cube that does not contain any of the

vectors generated on the i-th iteration:

For

, expression (9) will take the form

Let’s justify analytically the correctness of expressions (9), (10) in the context of the optimization problem (4). Let us characterize by the probability the situation when none of the generated independent and uniformly distributed stochastic values falls into the interval for the k-th dimension: where is the upper limit of the number of segments formed as a result of dividing the interval by a grid with a step .

Considering that , the expression (10) can be transformed into the form .

Let us characterize B the situation when an

-dimensional elementary parallelepiped is found that does not contain any of the

generated vectors, i.e.:

. Since an independent set of stochastic values

is generated for each i-th dimension, then for

we get

Expression (11) is identical to the left side of expression (9). Therefore, with a sufficiently large number of iterations i, at least one of the stochastic vectors from the set generated on the i-th iteration of the method falls into each unit cube with a side with a probability not less than .

We established that for optimization problem (4), both the set of global minima v and the set of (their corresponding arguments) are not empty. Let us focus attention on the set of global minima and investigate its probabilistic characteristics.

Suppose,

be the set of arguments of the global estrema points of the function

on the unit cube. Let’s define the spacing

from a sufficiently compact set

to an arbitrary point

as

Suppose that the point

is the closest to the

in the context of the spacing (12). As we proved earlier, with probability

at least one of the points of the set

falls into the unit cube with side

. Taking into account this fact, we redefine the expression (12) for the point

:

. This expression characterizes the situation when the point

is located in the center of the unit cube, and the point

is located at one of its vertices. Accordingly:

, where

is the modulus of continuity of the function

characterized by expression (5). On the other hand:

. Let's generalize the last two expressions and get

or

Inequality (13) is fulfilled with a probability not less than

. Relying on inequality (13), it can be asserted that for the function of the form (5) there are such positive constants

,

that the estimate

Operating with probabilistic characteristics (10), (14) it is possible to substantiate the convergence of the parallel computing oriented method proposed at the beginning of Section 2.2. Let's take a sufficiently small positive number

and find the value of the parameter

(the value of the estimate

is determined according to (14)). For

, the situation

is relevant. The probability of realization of this situation in the context of expressions (9), (14) is characterized by the expression

Based on expression (7),

,

we write

We present the estimate of the probability characteristic (9) as

Considering (16), we note that in expression (17) the third multiplier is greater than the second in terms of value. Taking this into account, as well as

, we present the expression (17) in a compact form:

, where

. The result allows for

to estimate the probability (15) as

Examining the limiting values of (19) for sufficiently small positives

, we obtain

Expression (20) can be interpreted as a necessary and sufficient condition for the convergence of the set , . Expression (19) allows us to state that the speed of this convergence is exponential.

Based on the proven conclusion regarding the convergence of the to the global extrema of the optimization problem (4) value, it can be predicted that the set of arguments is guaranteed to converge to the set in the spacing metric (12). Suppose that there is a subset that converges to the point with non-zero probability. This (considering the continuity of the function) means that the inequality holds with nonzero probability. But, relying on (20), we can write: . In the resulting contradiction, it is the second construction that is analytically justified (see the sequence of obtaining expression (20)). Therefore, the hypothesis that the set of arguments is guaranteed to coincide with the set in the spacing metric (12) can be considered proven. Analyzing expression (20), we can conclude that the set of decrements introduced at the beginning of Section 2.2 converges to zero.

2.3 Specific points of applied use of the authors’ method

The iterative process of solving the optimization problem (4) by the authors’ method is accompanied by both the calculation of the elements of the set of decrements and the determination of the constants and (to comply with the Hölder condition (5)). Let us approximate the sequence of decrements and formalize the process of estimating the values of the constants , .

We adapt condition (14) for functions that satisfy the Hölder condition. For the i-th iteration, we get

Subtract expression (22) from expression (21) taking into account rule (7) and that : .

After differentiating the obtained expression, we write

Denoting

,

,

, we present the expression (23) as

The constants

,

are related to the parameters

,

using expression (24). Estimates

can be determined by the “Least Squares” method based on the current values of the elements of sets

,

. At the same time, we take into account

, where

is the base of the logarithm. Considering the determined estimates of the constants

,

, we present expression (21) as

Expression (25) allows us to estimate the spacing to the exact global extrema value, taking into account the unit cube volume o, characteristic of the i-th iteration of the parallel computing oriented method.

Now let's analytically estimate the average number of iterations until the realization of the situation

when condition (6) is fulfilled. Suppose that this situation is realized on the ϕ-th iteration. Let's introduce a positive integer variable

for which

. For the situation

, we can write

, based on which we will get

Let us present expression (24) in the context of the Bienaime inequality:

Substitute expression (27) into expression (26) and get

Based on the terminology used, we express the average number of iterations of the parallel computing oriented method before the event

occurs as

Let's open the expression (29) for

:

Taking expression (28) into account, we write:

Consider inequality (31) when passing to the limiting form of expression (30) for

:

Expression (32) is an estimate of the average number of iterations of the method before it stops at the ϕ-th iteration. Assume that condition (32) is fulfilled. Based on expressions (14), (18), we can conclude that with a sufficiently large , probability that at least one of the values of , , …, is not in the vicinity of the exact global minimum of the optimization problem (4) tends to zero when the situation arises.

When the situation

occurs on the ϕ-th iteration, it is possible to calculate the estimates

,

using expression (25). In turn, these estimates can be used to estimate the spacing of the found global minimum

from the exact value

. Taking into account expressions (14), (25), we write:

Taking this into account, we characterize the lower limit of the probability of occurrence of the situation

from expression (6) as

3 Results

The authors’ method is focused on finding the global minimum of the optimization problem (4), which is an adaptation of the canonical form of the optimization problem (2). We algorithmically implement the method as a composition of two functions. The function implements the generation of a set of local minima and a set of decrements , as well as checking the fulfilment of the stop condition (6). The function implements the quality check of the found extrema using the probabilistic characteristic (34), which allows for determining the global minimum. The search for the extremum is implemented iteratively, and at each i-th iteration, computational operations are performed. For the convenience of readers, all variables used below are presented in the Nomenclature at the end of the article.

At each i-th iteration of the application of the function , the following actions are performed:

1. Using the stochastic tests method a pool of stochastic vectors , is formed;

2. From the pool , stochastic vectors belonging to the admissible set are selected. As a result, a set with capacity is formed;

3. The values of the objective function (4) on the set of admissible points are calculated: , ;

4. From the values calculated at stage 3, the smallest one is chosen: ;

5. The decrement and derived parameters , are calculated.

Stages 1–5 are repeated for iterations (as long as the condition is fulfilled). After performing iterations, the function completes its work. The output parameter of the function is a tuple of storage sets of capacity each. Next, the function comes into action, which for the input tuple implements the following structured calculation procedure:

1. Estimates , are calculated for sets , by the least squares method (see expression (24));

2. Such quality indicators of the found solution as the spacing (see expression (33)) and the probability characteristic (see expression (34)) are calculated.

The algorithmic constructions described above were implemented in the Python programming environment with a focus on supporting GPU Programming with Python and CUDA parallel computing technologies, the description of which is freely available at the link https://github.com/PacktPublishing/Hands-On-GPU-Programming-with-Python-and-CUDA. The created software was based on a software platform consisting of Windows, Python 2.7, Anaconda 5, CUDA 10 (incl. cuBLAS, cuSolver), PyCUDA, Scikit-CUDA. The hardware configuration of the test bench included a 64-bit Intel CPU, 32 Gigabytes of RAM, NVIDIA GPU RTX 3070.

The plan of experiments is designed to empirically confirm the adequacy of the presented method, as well as to obtain data for evaluating its effectiveness.

Empirical confirmation of the adequacy of the authors’ method was carried out based on the content of the Optimization Test Problems section of the specialized resource Virtual Library of Simulation Experiments: Test Functions and Datasets, freely available at the link https://www.sfu.ca/∼ssurjano/optimization.html.

Among the instances available in the Optimization Test Problems section, we selected ten optimization problems, the of admissible solutions domains of which are limited by -shaped parallelograms. Here is a list of selected problems with the values of the corresponding controlled parameters and the output results , in the context of the terminology used in the article:

T1: . For , , , we have: , ;

T2: . For we have: , ;

T3: . For we have: , ;

T4: We have: , ;

T5:

We have: , ;

T6: We have: , ;

T7: . We have: , ;

T8: . For , , , we have: , ;

T9: . For , , we have: , ;

T10: . For we have: , .

Test problems T1-T10 were solved using the authors’ method with the following initial data:

,

,

,

,

,

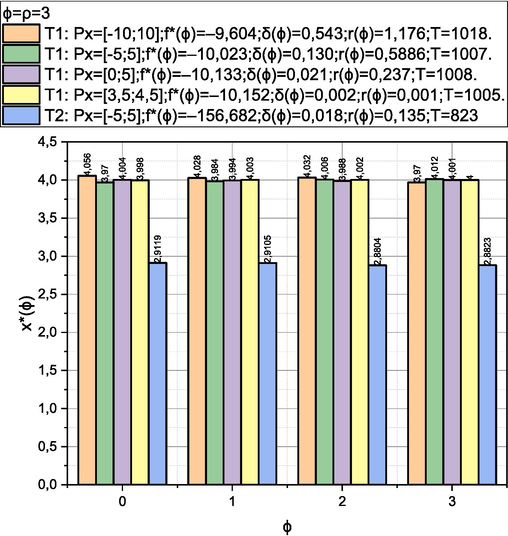

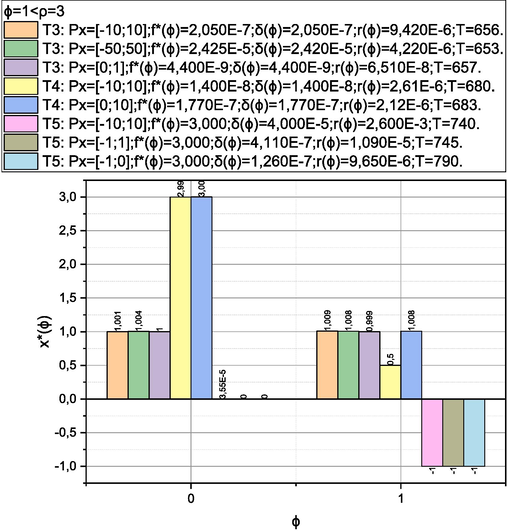

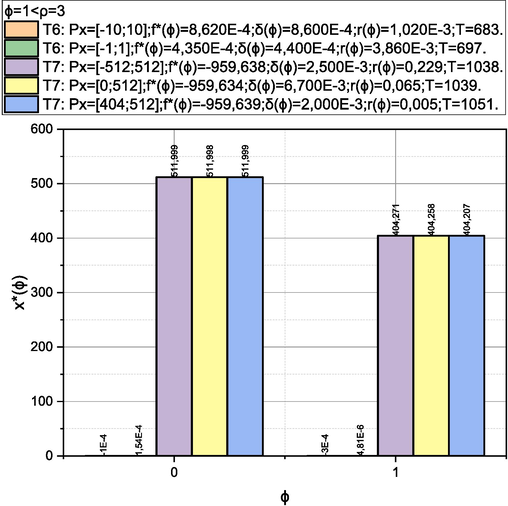

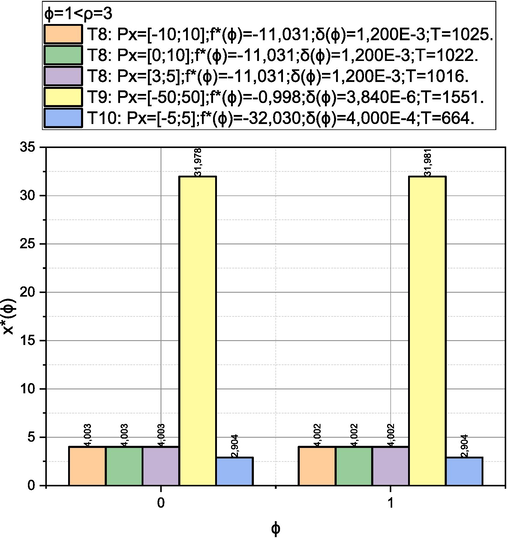

. The obtained empirical solutions are presented below in graphical and tabular form in Figs. 1-4. The results of the calculations are presented in the metric

, where

is the optimal value of the corresponding optimization problem found on the ϕ-th iteration;

- the value of the argument, which corresponds to the value of

;

- quality assessment (21);

is the time spent searching for the value of

.

Results of solving test problems T1, T2 by the authors’ method.

Results of solving test problems T3, T4, T5 by the authors’ method.

Results of solving test problems T6, T7 by the authors’ method.

Results of solving test problems T8, T9, T10 by the authors’ method.

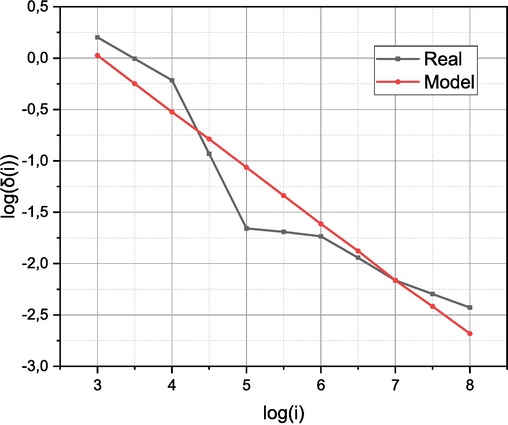

Let's finish the experimental part by checking the adequacy of the model embodied in the expression (24). Fig. 5 presents the results of a computational experiment to identify the functional dependence of

at

.

Real and simulated functional dependence of

at

.

According to the results presented in Fig. 5, the parameters and the root mean square deviation of the approximation (21) were determined by the least squares method. Note that the calculated root mean square deviation turned out to be smaller , which allows us to consider model (24) as adequate.

4 Discussion

Let's start the discussion by stating the empirically proven fact of the adequacy of the proposed method for solving the global optimization problems, in which continuous objective functions satisfy the Hölder condition, and the control parameters domain limited by continuous functions is characterized by a positive Lebesgue measure and is limited by multidimensional parallelepipeds. This fact is confirmed by the results of comparing the solutions obtained using the authors’ method with etalon solutions of optimization problems selected from the Optimization Test Problems section of the specialized resource Virtual Library of Simulation Experiments: Test Functions and Datasets. Figs. 1-4 shows that for all optimization test problems T1-T10, approximate values of the global minimum with an error in the range of were found. Moreover, for test problems, T1-T7, adequate values of the upper estimates of the spacing to the exact global minimum (metric ) with a probability close to one were obtained. Separately, we note that both the quality characteristics of the initial results calculated according to the authors’ method and the time to obtain them turned out to be practically independent of the size of the search area. This expected result (provided by a selection of simple statistical tests performed on iterations of the method) is a significant advantage of the authors’ method over analogues.

We will also note several theoretically grounded remarks concerning the authors’ method. In particular, the values of estimate (14) depend on the value of the established parameter , which is associated with the selected value of the unit cube (see (8)). The fact that the set is guaranteed to converge with an exponential rate is theoretically confirmed by the guaranteed convergence of the strictly monotonic set to the value of the exact global extrema with an exponential rate. Recall that the cumulative set grows with each transition from the i-th to the i+1-th iteration of the authors’ method (see the beginning of Section 2.2). Finally, if the admissible set is part of a unit cube (4), then when evaluating the probabilistic characteristics mentioned in Section 2.3, only the number of unit cubes in the partition of the admissible set will change. Accordingly, the estimates of the probabilistic characteristics from Section 2.3 (as well as the statements regarding the convergence of the authors’ method) are valid under the condition of compactness (4), which is characterized by the positivity of the Lebesgue measure. With this in mind, when implementing the authors’ method, at each iteration, you should check whether each stochastic point belongs to an admissible set.

5 Conclusion and future directions

The article proposes a parallel computing oriented method for solving the global minimum finding problems, in which continuous objective functions satisfy the Hölder condition, and the control parameters domain limited by continuous functions is characterized by a positive Lebesgue measure and is limited by multidimensional parallelepipeds. A typical example of such a task is the discrepancy minimizing problem between the left and right parts of some system of equilibrium equations. The method is based on simple statistical tests, thanks to which, at each iteration, growing sets of potential global minima and sets of decrements necessary for estimating the values of the Hölder constants are formed. The article theoretically substantiates and empirically proves the guaranteed convergence of the authors’ method to the real global minimum, which occurs at an exponential rate. For the continuous iterations number, analytical upper estimates of the spacing between the potential global minima and real global minima are formalized, as well as an estimate of the probability of overcoming this spacing is formalized. The decrements sequence approximation, estimation of a priori unknown Hölder constants, estimation of the average number of iterations of the method and probabilistic characteristics of the final solution are analytically justified. In addition to the theoretical proof, the adequacy of the authors’ method has been confirmed empirically. It turned out that both the quality characteristics of the initial results calculated by the authors’ method and the time to obtain them are practically independent of the size of the search area. This expected result is a significant advantage of the authors’ method over analogues.

Further research is planned to be devoted to the applied use of the proposed method, in particular, in the field of analysis of small stochastic data. The theoretical basis for these studies are articles such as (Kovtun et al., 2024; Kovtun et al., 2023).

Funding

This work was funded by Researchers Supporting Project number (RSP2024R503), King Saud University, Saudi Arabia.

Acknowledgments

The authors are grateful to all colleagues and institutions that contributed to the research and made it possible to publish its results.

References

- A minimalistic approach to physics-informed machine learning using neighbour lists as physics-optimized convolutions for inverse problems involving particle systems. J. Comput. Phys.. 2023;473:111750

- [CrossRef] [Google Scholar]

- Evaluating mixed-integer programming models over multiple right-hand sides. Oper. Res. Lett. 2023

- [CrossRef] [Google Scholar]

- Ali, H., Tariq, U. U., Hardy, J., 2021. Bensaali, F.; Amira, A.; Fatema, K.; Antonopoulos, N. A Survey on System Level Energy Optimisation for MPSoCs in IoT and Consumer Electronics. Comput. Sci. Rev., 41, 100416. 10.1016/j.cosrev.2021.100416.

- Parameterization of the stochastic model for evaluating variable small data in the Shannon entropy basis. Entropy. 2023;25:184.

- [CrossRef] [Google Scholar]

- Robust optimization of seasonal, day-ahead and real time operation of aggregated energy systems. Int. J. Electr. Power Energy Syst.. 2023;152:109190

- [CrossRef] [Google Scholar]

- Multi-objective optimization for pavement maintenance and rehabilitation decision-making: a critical review and future directions. Autom. Constr.. 2021;130:103840

- [CrossRef] [Google Scholar]

- Asymptotic closure condition and Fenchel duality for DC optimization problems in locally convex spaces. Nonlinear Anal. Theory Methods Appl.. 2012;75:3672-3681.

- [CrossRef] [Google Scholar]

- Fransen, M. P., Langelaar, M., 2023. Schott, D. L. Deterministic vs. Robust Design Optimization Using DEM-Based Metamodels. Powder Technology, 425, 118526. 10.1016/j.powtec.2023.118526.

- Energy minimization algorithm for estimation of clock skew and reception window selection in wireless networks. Sensors. 2021;2021(21):1768.

- [CrossRef] [Google Scholar]

- Meta-heuristic optimization of control structure and design for MMC-HVdc applications. Electr. Pow. Syst. Res.. 2022;213:108371

- [CrossRef] [Google Scholar]

- Problem-independent machine learning (PIML)-based topology optimization—A universal approach. Extreme Mech. Lett.. 2022;56:101887

- [CrossRef] [Google Scholar]

- Two-step data normalization approach for improving classification accuracy in the medical diagnosis domain. Mathematics. 2022;10:1942.

- [CrossRef] [Google Scholar]

- Stochastic forecasting of variable small data as a basis for analyzing an early stage of a cyber epidemic. Sci. Rep.. 2023;13

- [CrossRef] [Google Scholar]

- Entropy-metric estimation of the small data models with stochastic parameters. Heliyon. 2024;10:e24708.

- [Google Scholar]

- A meta-heuristic optimization-based method for parameter estimation of an electric arc furnace model. Results Eng.. 2023;17:100850

- [CrossRef] [Google Scholar]

- McNaughton, S., 2023, Optimizing Learning: An Overview. International Encyclopedia of Education(Fourth Edition), 560–567. 10.1016/b978-0-12-818630-5.14065-5.

- Application of the arithmetic optimization algorithm to solve the optimal power flow problem in direct current networks. Results Eng.. 2022;16:100654

- [CrossRef] [Google Scholar]

- Enriched global-local multi-objective optimization scheme for fuzzy logic controller-driven magnetorheological damper-based structural system. Mech. Syst. Sig. Process.. 2023;193:110267

- [CrossRef] [Google Scholar]

- Design and analysis of global optimization methods for proton exchange membrane fuel cell powered electric vehicle system with single switch DC-DC converter. Mater. Today:. Proc.. 2022;52:2057-2064.

- [CrossRef] [Google Scholar]

- Local tuning in peano curves-based global optimization scheme. Procedia Comput. Sci.. 2016;101:27-34.

- [CrossRef] [Google Scholar]

- Stochastic methods for global optimization and problem solving. Encyclopedia Bioinformat. Computat. Biol.. 2019;321–327

- [CrossRef] [Google Scholar]

- Methods of converting weight sequences in digital subtraction filtration. In: 2019 IEEE 14th International Conference on Computer Sciences and Information Technologies (CSIT). 2019.

- [CrossRef] [Google Scholar]

- A hybrid polynomial-based optimization method for underwater gliders with parameter uncertainty. Appl. Ocean Res.. 2023;133:103486

- [CrossRef] [Google Scholar]

- A new hybrid optimizer for stochastic optimization acceleration of deep neural networks: dynamical system perspective. Neurocomputing. 2022;514:341-350.

- [CrossRef] [Google Scholar]

- A hybrid method for optimization of frame structures with good constructability. Eng. Struct.. 2023;276:115338

- [CrossRef] [Google Scholar]

- Rigorous modelling and deterministic multi-objective optimization of a super-critical CO2 power system based on equation of state and non-linear programming. Energ. Conver. Manage.. 2019;198:111798

- [CrossRef] [Google Scholar]

- Dynamic flexibility optimization of integrated energy system based on two-timescale model predictive control. Energy. 2023;276:127501

- [CrossRef] [Google Scholar]

- An approach to assessment of the value and quantity of information in queueing systems based on pattern recognition and fuzzy sets theories. Cybern. Syst. Anal.. 2019;638–648

- [CrossRef] [Google Scholar]

- A coupled non-deterministic optimization and mixed-level factorial analysis model for power generation expansion planning – a case study of Jing-Jin-Ji metropolitan region, China. Appl. Energy. 2022;311:118621

- [CrossRef] [Google Scholar]

- A survey for solving mixed integer programming via machine learning. Neurocomputing. 2023;519:205-217.

- [CrossRef] [Google Scholar]