A novel pixel’s fractional mean-based image enhancement algorithm for better image splicing detection

⁎Corresponding author. hamidjalab@um.edu.my (Hamid A. Jalab)


This article was originally published by Elsevier and was migrated to Scientific Scholar after the change of Publisher.

Peer review under responsibility of King Saud University.

Abstract

Image manipulation has become widely accessible in recent years owing to sophisticated image editing tools that are readily available and easy to use. As a result, image forgery has increased to the point where it is often infeasible to distinguish authentic from tampered images with the naked eye. Image forgery plays a prominent role in the spread of misinformation, which may be criminalized in certain jurisdictions. Image splicing is a common type of image manipulation and one of the most widespread image tampering methods on the internet. Efforts have been made to counter image forgery by developing algorithms that can identify tampered images; however, more research is needed to keep up with advances in image editing tools. Previously, we explored fractional calculus in other image processing applications. In this study, we propose a novel pixel’s fractional mean (PFM) algorithm to enhance images prior to classification for better detection of image splicing forgery based on texture features. The proposed PFM enhances each pixel separately depending on the number of occurrences of the pixel’s intensity. Two sets of texture algorithms are used to extract essential features from suspected spliced images. These features are then used with a “support vector machine” (SVM) classifier to classify authentic and spliced images. The proposed model demonstrated an accuracy rate of 97% when evaluated with the publicly available image splicing dataset “CASIA v2.0”. With a relatively low-dimensional feature vector, the proposed model demonstrates high accuracy and efficiency, which corroborates the benefit of using fractional calculus in image processing algorithms.

Keywords

Image splicing

Fractional differential

Texture features

Support vector machine

1 Introduction

Identifying tampered images has been one of the primary challenges in image forensics research ever since the conception of digital image editing software. The field of image forensics deals with the detection and analysis of image features that may reveal the authenticity of the image. Image editing tools have boomed over the past decade due to increased demand for image enhancements and due to the increased computational power of personal devices. Such tools are now available on personal computers, mobile devices, and online, and offer different features according to each type of software. Generally, they facilitate image tampering through processes such as splicing, color enhancements and manipulation, spot-healing, blurring, and many others. Even though the implications of image forgery are outside the scope of this discussion, it is noteworthy that the act of image manipulation can be used for malicious purposes which may lead to deception and the spread of misinformation. Thus, there is always a need to develop new algorithms that can keep up with the advancements of image editing tools.

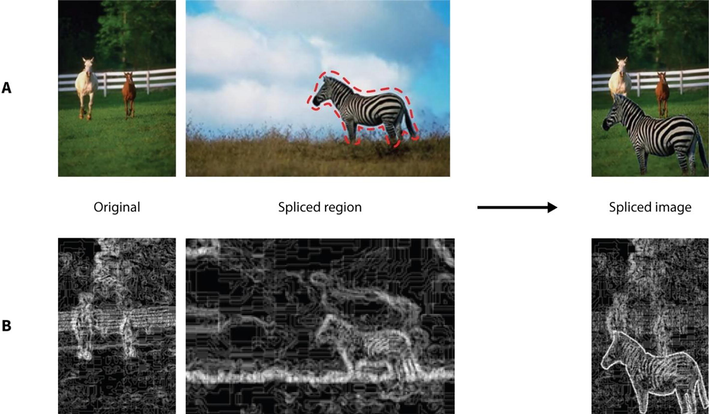

Image splicing is a typical image manipulation method that involves combining and merging parts from different images to generate a composite forged image, as illustrated in Fig. 1 (A). The generated forged image will have a disturbed pattern due to the insertion of the spliced region from another image. This manifests as artifacts that can be perceived as inconsistencies across the image. These artifacts can be exploited to design algorithms that detect the splicing operation. For instance, the texture of an image becomes distorted following the splicing operation. Since texture parameters are mathematical representations of image features, algorithms can detect splicing through texture features. Fig. 1 (B) presents examples of how the image texture is distorted following the splicing operation. The motivation for basing splicing detection on texture is that textural features are directly influenced by the splicing process and thus provide a quantitative basis for discriminating between authentic and spliced images.

Fig. 1. Example of image splicing and the texture distortion caused by the manipulation. (A) The basic process of splicing; (B) the change in texture features, which can facilitate splicing detection.

Image forensics uses two basic detection approaches: active and passive (Al-Azawi et al., 2021; Sadeghi et al., 2018). Active detection relies on additional information embedded in the image prior to distribution, as in digital watermarking (Kapse et al., 2018). Passive detection, on the other hand, uses statistical methods to identify changes in an image's features (El-Latif et al., 2019). Over the years, many passive algorithms for the detection of image splicing have been proposed. For feature extraction, these algorithms use a variety of techniques, including the “Local Binary Pattern” (LBP), Markov models, and deep learning. For instance, Zhang et al. (2015) proposed an image splicing detection algorithm based on the “Discrete Cosine Transform” (DCT) and LBP. In this algorithm, the colored input image is divided into multi-sized blocks. The DCT is applied to each block, and LBP is then used to extract features from it. The resulting LBP histograms are concatenated to form the feature vector, which is used with an SVM to classify spliced images. Despite the demonstrated accuracy of this method, LBP can be a limitation when dealing with the edges of an image. As for the second type, the work presented in (Shi et al., 2007) uses statistics-based feature extraction to build a splicing detection algorithm. The authors utilized Markov transition probabilities and moments of characteristic functions of wavelet sub-bands. This approach reportedly achieved a detection accuracy of 91.8%. Similarly, a splicing detection algorithm based on merging Markov and DCT features into a 109-D feature vector was proposed in (Zhang et al., 2009). This algorithm achieved an accuracy rate of 91.5%.

As for the third type, a deep learning-based algorithm for universal use in image forensics was proposed in (Bayar and Stamm, 2016). This algorithm utilizes a CNN with a novel convolutional layer that learns features related to image manipulation, as opposed to the classical approach that learns features related to the contents of an image. In that sense, the algorithm needs neither preprocessing nor a set of pre-selected features to detect image manipulations. The approach detected different forms of image manipulation with an accuracy of 99.10%. A similar deep learning-based algorithm was proposed in (El-Latif et al., 2019). However, this algorithm employs PCA to reduce the feature dimension, which makes it more efficient than the one proposed in (Bayar and Stamm, 2016). In (Rao et al., 2020), a local feature descriptor-based image splicing detection algorithm utilizing a two-branch CNN is proposed. This algorithm reportedly achieved an accuracy rate of 96.97% with the SVM classifier and the “CASIA V2.0” dataset.

It can be concluded from the above discussion that the types of features which are extracted from input images play a key role in shaping the overall performance of the detection and classification process.

Along the same lines, preprocessing is often employed to enhance the details of the input images to make it easier to extract essential information from the image. Preprocessing steps generally aim to improve the contrast and reduce the loss of details such that the output image would contain better details for feature extraction. Preprocessing may significantly improve the performance of the classification algorithm due to its direct effect on the image features. Therefore, in this study, we present a new pixel’s fractional mean (PFM) algorithm to enhance the quality of input images in the preprocessing step to obtain better detection accuracy of spliced images. The proposed PFM aims to preserve low-frequency features in smooth areas of the image while still enhancing texture details without changing gray level.

Many fractional calculus-based image enhancement techniques have been used to improve the contents of an image for various applications. A Riesz fractional model was proposed for enhancing text in license plate images (Raghunandan et al., 2018). Another approach, based on a fractional Poisson model, was proposed by (Roy et al., 2016) for enhancing text within video. These two methods are not robust enough for low-contrast text, because they enhance only the text edges. Moreover, an image enhancement model based on an adaptive fractional differential mask was proposed by (Zhang and Yan, 2019). This approach combined image segmentation with the fractional-order differential mask for image enhancement.

Recently, (Ibrahim et al., 2022) presented a new fractional partial differential equation (FPDE) model with some geometric functions for enhancing medical images. In that study, the FPDE model enhanced the image intensities efficiently.

Our proposed PFM is based on fractional calculus, which has applications in various physical and engineering disciplines. Previously, we utilized fractional calculus in a number of image processing algorithms, such as image denoising (Jalab, 2021; Jalab et al., 2017; Jalab et al., 2019; Moghaddasi et al., 2014), where we were able to demonstrate its benefit. Here, we aimed to evaluate whether using it as part of the preprocessing step of an image splicing detection model would yield favorable outcomes. The main contributions of the present image splicing detection study are:

- Propose a novel pixel’s fractional mean image enhancement model for better image splicing forgery detection.
- Propose an efficient algorithm for image splicing detection using two sets of texture algorithms for extracting the essential image features from suspected spliced images.

2 The proposed model

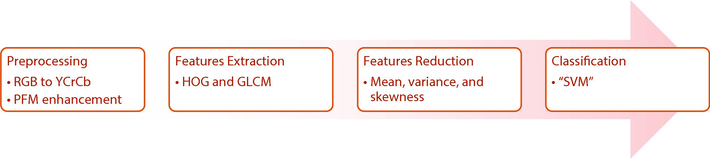

The main objective of this study is to improve the detection of image splicing by using a novel “fractional operators image enhancement” technique (FOIE). Fig. 2 shows the main steps of the proposed model, which include preprocessing (where the RGB image is converted into YCbCr, and then enhanced using the proposed PFM), features extraction, dimension reduction, and classification.

Fig. 2. The proposed model's block diagram.

2.1 Preprocessing

In the proposed model, we rely on preprocessing to ensure a better features extraction process which will improve the accuracy of the algorithm later on.

2.1.1 Chroma space

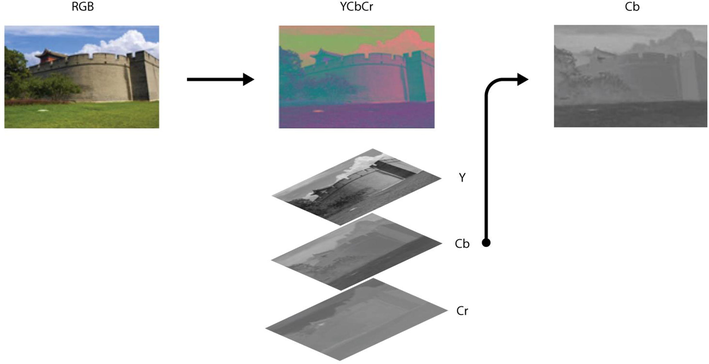

In this step, the input RGB image is converted to the YCbCr color space. The RGB space is the most popular and widely used color space in digital imaging. It simply represents the intensities of the red, green, and blue colors. Although RGB has its own advantages over the other color spaces, it is not suitable for use with splicing detection because in RGB, the correlation between red, green, and blue colors is very high, and the chromatic and achromatic information cannot be differentiated. We previously demonstrated the advantage of using the YCbCr color space in forgery detection through another algorithm (Moghaddasi et al., 2014; Subramaniam et al., 2019). In short, the YCbCr represents the luminance (Y), blue difference (Cb) and red difference (Cr) chrominance components, respectively. We chose Cb in our present study. Fig. 3 shows an RGB image and its YCbCr counterpart with the corresponding channels.
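The conversion step can be illustrated with a minimal Python sketch. It assumes the full-range BT.601 (JPEG/JFIF) weights for Cb, which may differ slightly from the studio-range scaling used by other toolboxes; the function names `rgb_to_cb` and `cb_channel` are hypothetical and chosen only for this illustration.

```python
def rgb_to_cb(pixel):
    """Return the Cb value of one (R, G, B) pixel with 8-bit channels."""
    r, g, b = pixel
    # Assumption: full-range BT.601 (JPEG/JFIF) weights, not taken from the paper.
    return 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b


def cb_channel(rgb_image):
    """Map a 2-D list of (R, G, B) tuples to a 2-D list of Cb values."""
    return [[rgb_to_cb(px) for px in row] for row in rgb_image]


if __name__ == "__main__":
    image = [[(128, 128, 128), (0, 0, 255)],
             [(255, 0, 0), (0, 255, 0)]]
    for row in cb_channel(image):
        print([round(v, 2) for v in row])
```

A neutral gray pixel maps to the mid-point value 128, since the three weights cancel when R, G, and B are equal; only color differences move Cb away from that value.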

Fig. 3. Illustration of preprocessing of a sample RGB image from the reference dataset. The obtained Cb images are subsequently enhanced by PFM and are then used for feature extraction.

2.1.2 Proposed image enhancement algorithm

Here, we explore new fractional operators to enhance and preserve the fine details of the images for the purpose of image splicing detection. The fractional operator is one of the significant approaches in fractional analysis, which is utilized to measure the fractional structures of images. This approach is widely utilized for resolving complex problems in image processing (Jalab, 2021; Jalab et al., 2017; Jalab et al., 2019). We obtain the generalized fractional image enhancement method by using the fractional operator (Ibrahim, 2011) as follows:

Because images are commonly treated as two-dimensional, it is convenient to use the two-dimensional parametric operator as specified in Eq. (1). Moreover, we consider the sample mean, which is the arithmetic average of the pixel values in an image. By applying the discrete formula of Eq. (1), we obtain the following expression for the pixel’s fractional mean

For a function ϰ in a fractional set, with v and ω > 1, the mean of the image pixels (Δ (ϰ)) satisfies the following:

The image enhanced by the proposed FOIE (Ien) will be the result of the multiplication of the input image I with the mean parameter Δ, as follows:
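Written as a formula, the sentence above corresponds to the following. This is a restatement of the text rather than the typeset equation of the original article, and the product is assumed to be applied pixel by pixel:

$$I_{en} = I \times \Delta(\varkappa)$$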

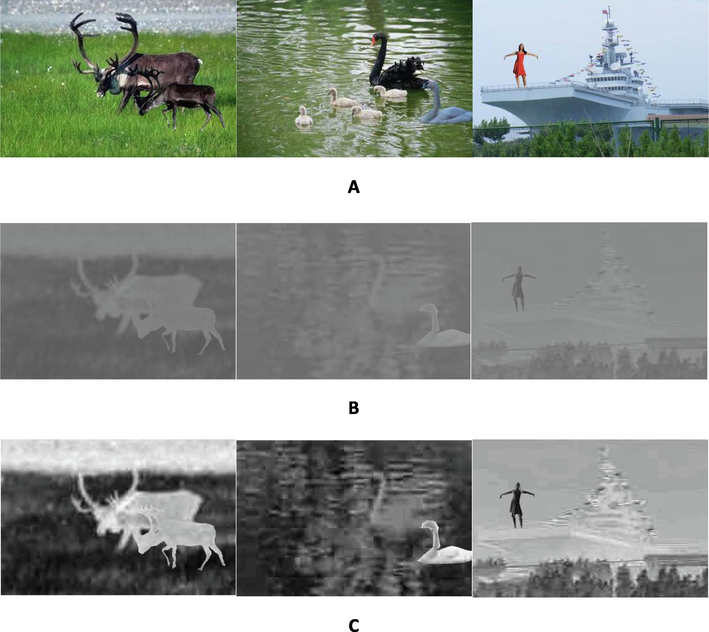

The effects attained by the proposed PFM for a set of sample images are shown in Fig. 4 (C). It is noted from Fig. 4(B) that one may not be able to directly notice the abrupt changes caused by the splicing operation. However, when applying the PFM for enhancement as shown in Fig. 4 (C), the spliced regions become clearer and brighter. It can be seen that the proposed PFM enhances the details and the structure of tampered objects despite the overall noise and blur that affect the images.

Fig. 4. The enhancement results of the proposed PFM. (A) The original spliced images, (B) the Cb converted images, (C) the PFM-enhanced images.

The enhancement of the low visibility regions in the input images is attributed to the ability of PFM to dynamically extract the illumination values from the pixels of the input image. The logic behind using PFM in image enhancement lies in its capability to capture the details of the image according to the pixel’s probability, as well as to capture the high-frequency details of an image, irrespective of the noise, blur, and distortion created by the forgery operations. This signifies the contribution of PFM in this work. It can be concluded that PFM helps to widen the differences in pixel values between authentic and tampered images.

In the preprocessing step, the image is divided into non-overlapping blocks of size 16 × 16, and the number of occurrences of each pixel intensity is then calculated. The algorithm for computing the PFM for each block is based on Eq. (2) and is presented in Algorithm 1.

Algorithm 1: Pixel’s fractional mean (PFM)
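The block-wise structure described in the text (16 × 16 non-overlapping blocks, per-intensity occurrence counts, and multiplication of each pixel by a mean parameter) can be sketched in Python as follows. This is not the authors' Algorithm 1: the expression for Δ from Eq. (2) is not reproduced here, so the sketch takes it as a caller-supplied function `delta_fn` of the pixel's relative frequency within its block. That dependence, and all function names, are assumptions made for illustration.

```python
from collections import Counter


def split_blocks(image, size=16):
    """Yield (top, left, block) for non-overlapping size x size blocks.

    Edge blocks are smaller when the image size is not a multiple of `size`.
    """
    rows, cols = len(image), len(image[0])
    for top in range(0, rows, size):
        for left in range(0, cols, size):
            block = [row[left:left + size] for row in image[top:top + size]]
            yield top, left, block


def enhance_block(block, delta_fn):
    """Scale each pixel by delta_fn(p), p being the relative frequency
    of that pixel's intensity inside the block."""
    counts = Counter(v for row in block for v in row)
    total = sum(counts.values())
    return [[v * delta_fn(counts[v] / total) for v in row] for row in block]


def enhance_image(image, delta_fn, size=16):
    """Apply enhance_block to every block and reassemble the image."""
    out = [list(row) for row in image]
    for top, left, block in split_blocks(image, size):
        for r, row in enumerate(enhance_block(block, delta_fn)):
            out[top + r][left:left + len(row)] = row
    return out


if __name__ == "__main__":
    # Hypothetical placeholder for Delta; the real form comes from Eq. (2).
    placeholder_delta = lambda p: 1.0 + p
    print(enhance_image([[1, 1], [1, 2]], placeholder_delta, size=2))
```

Passing Δ in as a function keeps the block handling and the occurrence counting separate from the fractional formula, so the latter can be swapped in once its exact form and the value of ω are fixed.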

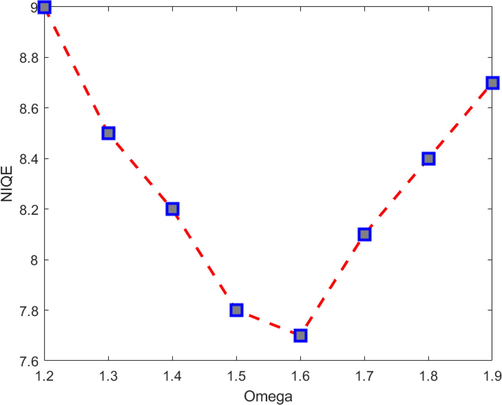

Fig. 5. Relationship between the NIQE (Natural Image Quality Evaluator) score and ω. The value of the parameter ω is chosen as the one that gives the lowest NIQE score.

2.2 Feature extraction

Our proposed model is based on the extraction of low dimension features for the detection of image splicing. We primarily deal with the image artifacts that manifest as inconsistencies which can be seen in the tampered image. Specifically, we are concerned with the texture of the image which is altered as a result of the splicing process.

For this study, we considered the effect of the textural features of the tampered regions in forged images, which are represented by the individual pixel’s statistical properties such as the mean, variance, skewness, and kurtosis, in addition to the relation between pixels. For this, we considered the following: “Histogram of Oriented Gradient” (HOG) and “Gray-Level Co-Occurrence Matrix” (GLCM) (Dalal and Triggs, 2005).

- HOG measures histograms of gradient directions (Dalal and Triggs, 2005). It describes the distribution of the directions of the local pixel intensities in each region of the image. This is done by dividing the image into smaller regions and creating a 1-D gradient orientation histogram for each region. The direction and magnitude of the gradient, which are later used to obtain the HOG, are determined from the pixels inside the given region. In total, 13,824 HOG textural features are obtained for every Cb image.

- GLCM characterizes the texture of an image by considering the spatial relationship of pixels. It describes, via a statistical measure, the frequency at which pixel pairs with certain values and spatial relationships occur in a grey-level image. We used GLCM in four directions, which indicate the offset between a pair of pixels. These directions are expressed in degrees (0°, 45°, 90°, 135°), where each one represents the neighborhood of the pixel i. In the present model, the offset is one pixel long and is expressed as [0 1; −1 1; −1 0; −1 −1] for the four directions. Fundamentally, each element (i, j) of the GLCM gives how often a pixel with value i occurs at the specified offset relative to a pixel with value j in image P. We employed GLCM to obtain the following features: contrast, correlation, energy, and homogeneity, which are described as follows:

The value of Eq. (4) represents the contrast between a given pixel and its neighbor over the whole image; it is equal to 0 if the image is constant.

The correlation represents the joint probability of occurrence of the given pairs of pixels, as formulated in Eq. (5), where µ and σ represent the mean and standard deviation of pixel i and pixel j, respectively. The value of the equation specifies the extent to which two neighbors are correlated. Correlation always lies within the range [−1, 1], where a completely positively correlated image has a correlation of +1 and a completely negatively correlated image has a correlation of −1.

Energy measures the angular second moment of an image. Eq. (6) represents the sum of squared elements in the GLCM. The values of energy range from 0 to 1, where the energy is equal to 1 if the image is constant.

Homogeneity measures how close the distribution of elements in the GLCM is to the GLCM diagonal. Given in Eq. (7), the homogeneity values range from 0 to 1.
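For reference, the standard definitions of these four measures, with p(i, j) denoting the normalized GLCM entry, are given below. They are assumed to correspond to Eqs. (4) to (7), since they agree with the properties stated above (zero contrast and unit energy for a constant image, correlation in [−1, 1], homogeneity in [0, 1]); the numbering is added here only to match the references in the text.

$$\text{Contrast} = \sum_{i,j} |i-j|^{2}\, p(i,j) \tag{4}$$

$$\text{Correlation} = \sum_{i,j} \frac{(i-\mu_i)(j-\mu_j)\, p(i,j)}{\sigma_i \sigma_j} \tag{5}$$

$$\text{Energy} = \sum_{i,j} p(i,j)^{2} \tag{6}$$

$$\text{Homogeneity} = \sum_{i,j} \frac{p(i,j)}{1+|i-j|} \tag{7}$$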

Overall, we extract (4 × 4) GLCM texture features by applying the aforementioned equations. Therefore, 1536 textural features from every Cb image are extracted from the GLCM for the purpose of splicing detection.
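The 4 × 4 GLCM features (four properties for each of the four offsets) can be sketched in plain Python as follows. The sketch uses the standard definitions of contrast, correlation, energy, and homogeneity and the offsets quoted above; the function names, the `levels` parameter, and the choice to return NaN for the correlation of a constant image are assumptions made for illustration, not details of the authors' MATLAB implementation.

```python
import math

# (row, col) offsets for 0, 45, 90 and 135 degrees, as listed in the text.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def glcm(image, offset, levels):
    """Normalized co-occurrence matrix of an integer image for one offset."""
    dr, dc = offset
    rows, cols = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("image too small for this offset")
    return [[n / total for n in row] for row in counts]


def glcm_features(p):
    """Contrast, correlation, energy and homogeneity of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    var_i = sum((i - mu_i) ** 2 * v for i, _, v in cells)
    var_j = sum((j - mu_j) ** 2 * v for _, j, v in cells)
    denom = math.sqrt(var_i * var_j)
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        # Correlation is undefined when either variance is zero.
        "correlation": cov / denom if denom > 0 else float("nan"),
        "energy": sum(v * v for _, _, v in cells),
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
    }


def texture_vector(image, levels):
    """Concatenate the four properties over the four offsets (16 values)."""
    vector = []
    for offset in OFFSETS:
        feats = glcm_features(glcm(image, offset, levels))
        vector += [feats["contrast"], feats["correlation"],
                   feats["energy"], feats["homogeneity"]]
    return vector
```

For a constant image the only non-zero GLCM entry lies on the diagonal, so the sketch returns a contrast of 0 and an energy and homogeneity of 1, in line with the limiting cases described above.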

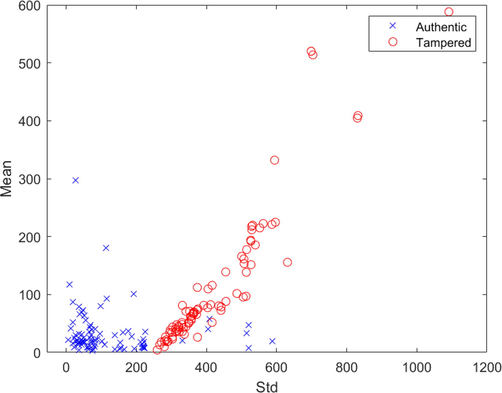

As mentioned previously, textural features are directly affected by the splicing operation such that any disturbances can be detected by the splicing detection algorithm. The distribution of textural features provides a quantitative basis to discriminate between authentic and spliced images. This behavior is illustrated in the scatter plot shown in Fig. 6, in terms of the mean and standard deviation. Such a plot demonstrates that the two classes (i.e., authentic and tampered) are distinct and can indeed be separated. From the figure, it can be seen that the features fall into two groups: “authentic” and “tampered”.

Fig. 6. The feature distribution of authentic and spliced images from the reference database.

2.3 Dimension reduction

Reducing the dimensionality of the features is necessary to limit the computational overhead and resource allocation. This keeps the algorithm efficient, which leads to performance gains during the training and testing phases. Dimension reduction is carried out by removing non-essential features from the feature vector. The resulting feature vector contains only the essential features that give the best representation of the image.

In the present work, the mean, variance, and skewness are used to reduce the dimensionality of the data; these values are calculated for each feature set extracted by HOG and GLCM. After feature reduction, the final feature vector comprises 28 features (12 from HOG and 16 from GLCM). This vector is subsequently used by the SVM classifier in the classification step.
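The three statistics named above can be computed as in the following Python sketch. It assumes population (divide-by-n) moments and returns a skewness of 0 for a constant feature set; how the feature sets are grouped to arrive at the 12 HOG and 16 GLCM values is not specified in the text and is not modelled here. The function name `summary_stats` is hypothetical.

```python
def summary_stats(values):
    """Return (mean, variance, skewness) of a non-empty list of feature values.

    Assumption: population moments (division by n) are used.
    """
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    if variance == 0:
        return mean, 0.0, 0.0  # skewness is taken as 0 for constant input
    third_moment = sum((v - mean) ** 3 for v in values) / n
    return mean, variance, third_moment / variance ** 1.5


if __name__ == "__main__":
    print(summary_stats([1.0, 2.0, 3.0]))       # symmetric set, zero skewness
    print(summary_stats([0.0, 0.0, 0.0, 4.0]))  # long right tail, positive skewness
```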

2.4 The classification

The proposed work employs the SVM classifier, which is well known and is applied in different applications, such as pattern recognition and classification. The code for this classifier is accessible through MATLAB R2021b (The Mathworks, 2021).

The SVM is a binary classifier that divides images into two classes based on a kernel function (here, RBF). The SVM classification involves splitting the image dataset into training and testing sets.
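For reference, the RBF kernel has the standard form K(x, y) = exp(−γ‖x − y‖²). A minimal Python sketch follows; the function name `rbf_kernel` and the default value of `gamma` are illustrative assumptions, not settings reported in this study.

```python
import math


def rbf_kernel(x, y, gamma=1.0):
    """Standard RBF kernel between two equal-length feature vectors."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)
```

The kernel equals 1 for identical vectors and decays toward 0 as the vectors move apart, with `gamma` controlling how quickly that happens.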

In this study, 5-fold cross-validation is implemented. The dataset is divided into five subsets, and the procedure is repeated five times; in each iteration, 70% of the images are used for training and the remaining 30% are used for testing. Additionally, in each iteration, one of the five subsets is used for testing while the rest are used for training. This setting ensures that every image is used for both training and testing, thus reducing any bias that could arise from manual selection of data.
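The fold rotation can be illustrated with a short Python sketch. It covers only the "one subset for testing, the rest for training" scheme: with five equal folds, each iteration holds out roughly one fifth of the images, and the sketch does not attempt to reproduce the 70%/30% proportion quoted above. The function name `k_fold_indices` and the fixed shuffling seed are assumptions.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)          # reproducible shuffle
    folds = [indices[i::k] for i in range(k)]     # k near-equal subsets
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


if __name__ == "__main__":
    for train, test in k_fold_indices(10, k=5):
        print(len(train), "training samples,", len(test), "test samples")
```

Every index appears in exactly one test fold, which is the property that lets each image contribute to both training and testing across the five iterations.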

3 Experimental results and discussion

All the tests and coding were implemented using MATLAB 2021b.

3.1 Image dataset

We used the publicly-available “CASIA V2.0” dataset in our present study (CASIA Tampered Image Detection Evaluation Database (CASIA TIDE v2.0)). This dataset is widely used in splicing detection. The dataset contains 12,614 images in total, of which 7491 (60%) are authentic, and 5123 (40%) are spliced. The images are classified into several categories and are offered in JPG, TIF, BMP image formats.

3.2 Evaluation metrics

Accuracy, true positive rate (TPR), and true negative rate (TNR) metrics were used to evaluate the performance of the proposed model. These metrics were calculated as follows:
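These metrics have the standard definitions TPR = TP / (TP + FN), TNR = TN / (TN + FP), and Accuracy = (TP + TN) / (TP + TN + FP + FN). The Python sketch below computes them from label lists; treating the spliced class as the positive class (label 1) is an assumption, as are the function names.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FN, TN and FP, with `positive` as the positive class."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1
            else:
                fn += 1
        elif pred == positive:
            fp += 1
        else:
            tn += 1
    return tp, fn, tn, fp


def evaluate(y_true, y_pred, positive=1):
    """Return TPR, TNR and accuracy as fractions in [0, 1].

    Both classes must be present in y_true, otherwise a rate is undefined.
    """
    tp, fn, tn, fp = confusion_counts(y_true, y_pred, positive)
    return {
        "TPR": tp / (tp + fn),
        "TNR": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
    }
```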

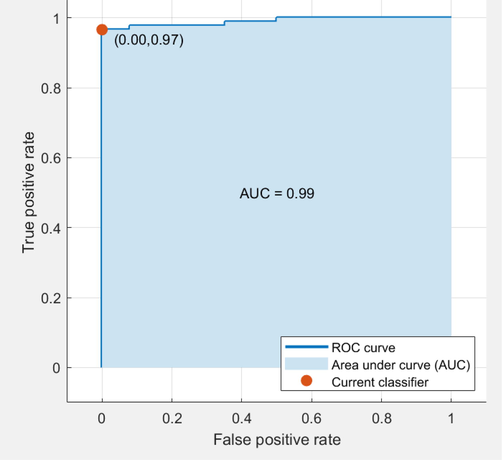

The results reveal that the proposed method achieves TPR, TNR, and accuracy rates of 98.80%, 98%, and 98.40%, respectively, with a 28-D feature vector on the CASIA V2.0 dataset (see Table 1). Another way to validate the classification results is the “receiver operating characteristic” (ROC) curve and its score, the “area under the curve” (AUC). This curve presents the classification performance at all thresholds by plotting the true positive rate against the false positive rate. We constructed an ROC curve for the proposed model with the CASIA V2.0 dataset, as shown in Fig. 7. From the figure, the AUC is equal to 0.99 (higher is better), which indicates good separation of the two classes.
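As an illustration of what the AUC measures, the Python sketch below computes it through the equivalent pairwise formulation: the fraction of (positive, negative) pairs in which the positive sample receives the higher classifier score, with ties counted as one half. The function name `roc_auc` and the convention that label 1 marks the positive class are assumptions; the scores in the usage lines are made-up toy values, not results from this study.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison of scores."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    # Toy scores for illustration only.
    print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))
```

An AUC of 1 means every positive sample is scored above every negative one, while 0.5 corresponds to a classifier that ranks the two classes no better than chance.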

| Feature Extraction Methods | Features Dimensions | TPR (%) | TNR (%) | Accuracy (%) |
|---|---|---|---|---|
| Moghaddasi et al. (2014) Cb color space | 50 | 87.97 | 93.51 | 90.74 |
| Jaiprakash et al. (2020) Cr color space | 212 | 89.10 | 90.90 | 89.50 |
| Li et al. (2017) | 972 | 49.00 | 93.00 | 92.38 |
| El-Latif et al. (2019) | 1024 | 96.00 | 96.45 | 96.36 |
| Subramaniam et al. (2019) CbCr color space | 48 | 99 | 96 | 97.90 |
| Proposed Method Cb | 28 | 98.80 | 98.00 | 98.40 |

Fig. 7. The ROC curve for the proposed model using the CASIA V2.0 dataset.

3.3 Comparison with other image splicing detection algorithms

The proposed algorithm's performance was compared to those of similar state-of-the-art splicing detection approaches to verify its robustness. Moghaddasi et al. (2014) applied the “run length run number” algorithm along with PCA and kernel PCA for feature reduction to detect image splicing. This method achieved an accuracy rate of 93.80% with a 100-D feature vector. Jaiprakash et al. (2020) utilized image statistics and pixel correlation obtained from the DCT and DWT domains to detect splicing and copy-move forgeries. The accuracy achieved by this method was 89.5% with a 212-D feature vector. Li et al. (2017) employed a Markov feature vector in the quaternion discrete cosine transform domain to detect image splicing with an SVM. Their method achieved an accuracy rate of 92.38% with a feature dimension of 972. El-Latif et al. (2019) proposed an algorithm for the detection of image splicing using deep learning, with a 1024-D vector, for automated generation of features from colored images. Despite the high accuracy of that method, which is comparable to ours, deep learning incurs a large computational overhead, requires extensive datasets, and has limited hardware support. This can be considered a disadvantage when aiming to develop an efficient algorithm.

Table 1 shows the results of the relative comparison between our method and the referenced state-of-the-art methods (El-Latif et al., 2019; Jaiprakash et al., 2020; Li et al., 2017; Moghaddasi et al., 2014; Subramaniam et al., 2019).

The experimental results show that the proposed method achieved the highest splicing detection accuracy, which indicates how well it distinguishes spliced from authentic images. The method of Subramaniam et al. (2019) achieved the second-highest accuracy among the five other methods.

The results shown in Table 1 confirm that the proposed method achieves the highest accuracy with the lowest number of feature dimensions. This attests to the efficacy and reliability of the method, and further supports the benefit of using fractional calculus in image processing.

4 The limitations

- The proposed image enhancement process depends on the value of ω, which is determined experimentally.
- The feature dimension reduction can reduce the detection accuracy, because some information in the extracted image features is lost.

5 Conclusion

We proposed a novel PFM algorithm that enhances image quality as a new way to improve the accuracy of image splicing detection. The proposed PFM aims to preserve low-frequency features in the smooth regions of the image while still enhancing the details of textures. We extracted texture features using both the GLCM and HOG approaches to obtain features that represent the manipulations done to the image. Feature reduction was implemented to improve the overall efficiency of the method. The proposed method demonstrated an accuracy rate of 98.40% with a feature dimension of 28 when assessed using the CASIA V2.0 dataset. This indicates that the method can identify spliced images accurately. The proposed method is advantageous over similar methods in that it achieves high classification accuracy with the lowest number of feature dimensions. Future work may aim to apply the present algorithm to the detection of other types of image forgery.

Funding

This research is supported by Faculty Program Grant (GPF096C-2020), University of Malaya, Malaysia.

Conflict of interests

The authors declare no conflict of interests regarding the publication of this article.

Author contributions

All authors contributed equally to the production of this manuscript.

References

- Image splicing detection based on texture features with fractal entropy. CMC-Computers Mater. Continua. 2021;69(3):3903-3915.
- A deep learning approach to universal image manipulation detection using a new convolutional layer. In: Proceedings of the 4th ACM Workshop on Information Hiding and Multimedia Security. 2016.
- CASIA Tampered Image Detection Evaluation Database (CASIA TIDE v2.0). http://forensics.idealtest.org:8080/index_v2.html.
- Histograms of oriented gradients for human detection. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05). 2005.
- A passive approach for detecting image splicing using deep learning and Haar wavelet transform. Int. J. Comput. Network Inf. Security. 2019;11(5):28-35.
- Hamid A. Jalab, A. a. R. A.-S., Hadil Shaiba, Rabha W. Ibrahim, Dumitru Baleanu. (2021). Fractional Rényi Entropy Image Enhancement for Deep Segmentation of Kidney MRI. CMC- Materials & Continua, 6, 2061–2075. 10.32604/cmc.2021.015170.
- On generalized Srivastava-Owa fractional operators in the unit disk. Adv. Difference Equations. 2011;2011(1):1-10.
- A medical image enhancement based on generalized class of fractional partial differential equations. Quant Imaging Med. Surg. 2022;12(1):172-183.
- Low dimensional DCT and DWT feature based model for detection of image splicing and copy-move forgery. Multimedia Tools Appl. 2020;79(39):29977-30005.
- Image denoising algorithm based on the convolution of fractional Tsallis entropy with the Riesz fractional derivative. Neural Comput. Appl. 2017;28(1):217-223.
- New texture descriptor based on modified fractional entropy for digital image splicing forgery detection. Entropy. 2019;21(4):371.
- Digital image security using digital watermarking. Int. Res. J. Eng. Technol. 2018;5(3):163-166.
- Image splicing detection based on Markov features in QDCT domain. Neurocomputing. 2017;228:29-36.
- The Mathworks (2021). “Matlab”.
- Improving RLRN image splicing detection with the use of PCA and Kernel PCA. Sci. World J. 2014;2014:1-10.
- Riesz fractional based model for enhancing license plate detection and recognition. IEEE Trans. Circuits Syst. Video Technol. 2018;28(9):2276-2288.
- Deep learning local descriptor for image splicing detection and localization. IEEE Access. 2020;8:25611-25625.
- Fractional Poisson enhancement model for text detection and recognition in video frames. Pattern Recognition. 2016;52:433-447.
- State of the art in passive digital image forgery detection: copy-move image forgery. Pattern Anal. Appl. 2018;21(2):291-306.
- A natural image model approach to splicing detection. In: Proceedings of the 9th Workshop on Multimedia & Security. 2007.
- Improved image splicing forgery detection by combination of conformable focus measures and focus measure operators applied on obtained redundant discrete wavelet transform coefficients. Symmetry. 2019;11(11):1392.
- Zhang, J., Zhao, Y., Su, Y. (2009). A new approach merging Markov and DCT features for image splicing detection. IEEE International Conference on Intelligent Computing and Intelligent Systems, 2009. ICIS 2009.
- Image enhancement algorithm using adaptive fractional differential mask technique. Math. Found. Comput. 2019;2(4):347.
- Image-splicing forgery detection based on local binary patterns of DCT coefficients. Security Commun. Networks. 2015;8(14):2386-2395.